To evaluate the performance of a change detection model, manual visual comparison can be supplemented with evaluation metrics such as F1 score, IoU, Recall, and Precision, which quantify the difference between model inference results and ground truth labels. In practice, combine several metrics and interpret them in light of the specific detection task and of what each metric measures.
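As a rough illustration of how these four metrics relate, the sketch below computes them from counts of true positives, false positives, and false negatives using the standard textbook definitions. The function name `change_detection_metrics` and the sample counts are hypothetical, and the Model Evaluation tool may compute or aggregate its metrics differently.

```python
def change_detection_metrics(tp: int, fp: int, fn: int) -> dict:
    """Standard definitions of Precision, Recall, F1 and IoU from raw counts.

    tp: changes predicted and present in the ground truth
    fp: changes predicted but absent from the ground truth
    fn: ground truth changes the model missed
    (Hypothetical helper for illustration; not part of SuperMap iDesktopX.)
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


# Made-up counts, for illustration only.
metrics = change_detection_metrics(tp=80, fp=20, fn=20)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Because IoU also penalizes both false positives and false negatives in a single ratio, it is never higher than F1 for the same counts, which is one reason to read the metrics together rather than in isolation.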

SuperMap iDesktopX provides a Model Evaluation tool that supports accuracy assessment for image Object Detection, Binary Classification, Multiple Classification, and Detect Common Change models.

Main Parameters

- Function Entry: Toolbox -> Machine Learning -> Imagery Analysis -> Model Evaluation tool.

- Inference Result: Select vector data for model evaluation. This can be output from Object Detection/Detect Common Change models, or vectorized results converted from Binary Classification/Multiple Classification raster outputs.

- Real Label: Ground truth vector dataset for comparison with inference results.

- Model Type: Supports Object Detection, Binary Classification, Multiple Classification, and Detect Common Change.

- Inference Result Category Field: Specifies the category field in inference result vectors. Not applicable for Binary Classification and Detect Common Change. If "None" is selected, the system uses the value field. If no valid field exists, all records will be treated as a single category.

- Real Label Category Field: Specifies the category field in ground truth vectors. Not applicable for Binary Classification and Detect Common Change. If "None" is selected, the system uses the value field. If no valid field exists, all records will be treated as a single category.

- Overlapping Threshold: IoU threshold for determining correct object detection bounding boxes. A prediction is considered correct if IoU exceeds this threshold (range: 0-1). IoU = intersection area / union area of predicted and true bounding boxes. Applies only to Object Detection. Default: 0.5.

- Result Data: Output table storing evaluation metrics. Specify datasource and dataset name.

- Run: Click Run to execute the evaluation. Execution progress will display in the Python window.
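The Overlapping Threshold rule described above can be sketched for axis-aligned bounding boxes as follows. This is a minimal illustration, assuming boxes are given as `(xmin, ymin, xmax, ymax)` tuples; the helper names `box_iou` and `is_correct_detection` are hypothetical, and how the tool matches predictions to ground truth boxes internally is not documented here.

```python
def box_iou(a, b):
    """IoU = intersection area / union area of two axis-aligned boxes.

    Boxes are (xmin, ymin, xmax, ymax); this layout is an assumption
    made for the example.
    """
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_correct_detection(pred, truth, threshold=0.5):
    """A prediction counts as correct if its IoU exceeds the threshold."""
    return box_iou(pred, truth) > threshold


truth = (0, 0, 10, 10)
print(is_correct_detection((2, 0, 12, 10), truth))  # IoU = 80 / 120
print(is_correct_detection((5, 0, 15, 10), truth))  # IoU = 50 / 150
```

Raising the threshold demands tighter localization, so the same set of predictions yields fewer correct detections and lower Precision and Recall.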

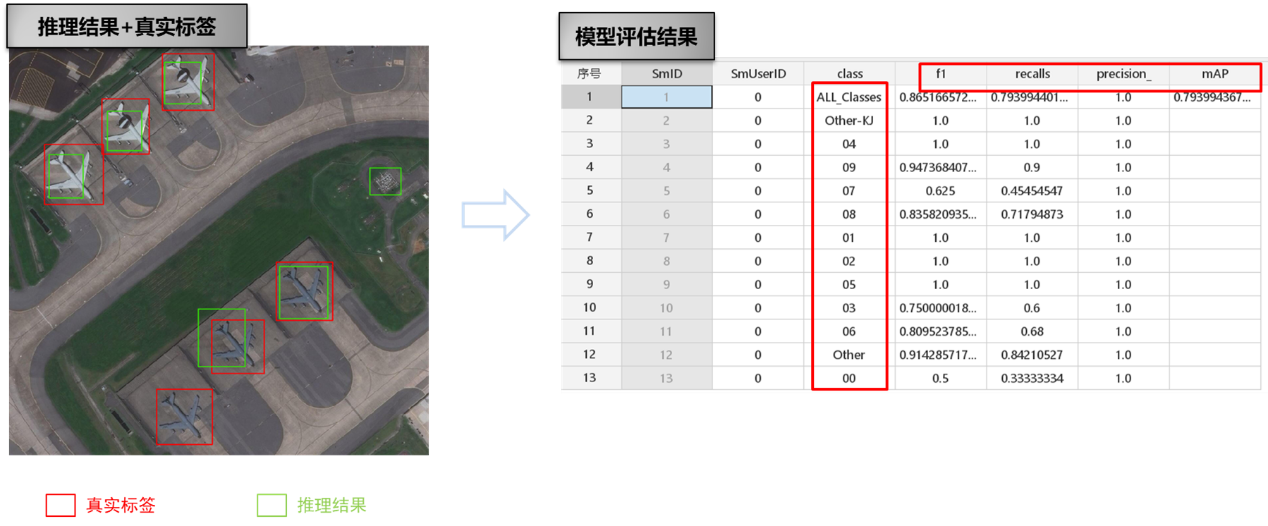

The following figure shows model evaluation results for aircraft object detection using this tool.

Related Topics

Machine Learning Environment Configuration
