Model training uses the generated training data to train a neural network model, iteratively evaluating it according to the configured parameters until a usable model is obtained.

SuperMap integrates open-source frameworks such as TensorFlow and PyTorch to train machine learning or deep learning models on different categories of datasets. Training runs for multiple epochs to obtain a network model with better results, and can start from a pre-trained model built on large-scale foundational training data to reduce training time. Hyperparameter tuning (learning rate, batch size, etc.) can further improve training efficiency and accuracy. The desktop training tool is suited to short-cycle model training.
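The influence of the learning rate mentioned above can be seen on a toy problem. The following is a minimal sketch, not SuperMap code: it minimizes f(w) = w² with plain gradient descent, and the function name `gradient_descent` and the three sample rates are assumptions chosen only for illustration.

```python
def gradient_descent(learning_rate, steps=20, start=1.0):
    """Minimize f(w) = w**2 with plain gradient descent.

    Returns the list of parameter values visited, starting with `start`.
    Illustrative only; real training tools use more elaborate optimizers.
    """
    w = start
    history = [w]
    for _ in range(steps):
        grad = 2.0 * w                 # derivative of w**2
        w = w - learning_rate * grad   # standard update rule
        history.append(w)
    return history


# Compare a small, a moderate, and an excessively large learning rate.
for lr in (0.01, 0.4, 1.1):
    final = gradient_descent(lr)[-1]
    print(f"learning rate {lr}: |w| after 20 steps = {abs(final):.4g}")
```

In this sketch the smallest rate moves toward the minimum only slowly, the moderate rate converges quickly, and the largest rate overshoots on every step so the parameter flips sign and grows instead of settling.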

Main Parameters

- Function Entry: Toolbox -> Machine Learning -> Imagery Analysis -> Model Training tool.

- Training Data Path: Select the result folder generated by the Generate Training Data tool, which contains image and label training data.

- Purpose of Training Model: Six purposes are available: Object Detection, Binary Classification, Multiple Classification, Scene Classification, Object Extraction, and Detect Common Change.

- Model Algorithm: Select a model algorithm appropriate to the model's purpose. The default value is the recommended algorithm for each purpose; users can change it or choose a custom algorithm.

- Training Config File: The training configuration file (*.sdt) must match the algorithm selected in Model Algorithm. For built-in algorithms, the matching config file in resources_ml/train_config is selected automatically; for custom algorithms, users must specify the corresponding config file.

- Training Times: Number of epochs (full passes over the training dataset). Default is 10. More epochs improve how well the model fits the training data but may cause overfitting. Training time grows roughly in proportion to the epoch count.

- Single Step Operation Amount: Number of images processed per training step (batch size). Default is 1. Within a reasonable range, a larger batch size uses more memory/VRAM and shortens training time.

- Learning Rate: Optional parameter that determines the step size of model parameter updates. An excessively high rate causes the parameters to fluctuate; a low rate slows convergence. If unspecified, the built-in initial learning rate is used.

- Training Log Path: Specifies the storage path for training logs. The folder must be empty; multiple subfolders will be generated in it.

- Load Pretrained Model: Enables transfer learning from a pre-trained model (.sdm). Note: the data size, categories, and purpose must be consistent between the two training sessions.

- Processor Type: Choose either Central Processing Unit (CPU) or Graphics Processing Unit (GPU). The GPU accelerates computation.

- GPU Number: Specifies GPU IDs for processing when multiple GPUs exist. Default is 0. For multi-GPU training, separate IDs with commas (e.g., 1,3).

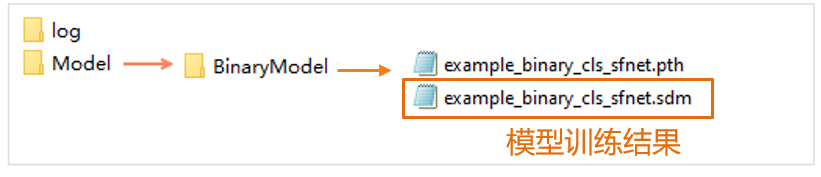

- Result Data: Sets the storage path and name of the output model.
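The relationship between Training Times, Single Step Operation Amount, and the amount of work a training run performs can be sketched in a few lines of Python. This is not part of the SuperMap API: `training_steps` and `parse_gpu_ids` are hypothetical helpers, and the sketch assumes that a final partial batch still counts as one step, which the tool may or may not do.

```python
import math


def training_steps(num_images, epochs=10, batch_size=1):
    """Estimate the total number of training steps.

    Defaults mirror the documented defaults (10 epochs, batch size 1).
    Assumption: an incomplete last batch is kept and counts as one step.
    """
    if num_images <= 0 or epochs <= 0 or batch_size <= 0:
        raise ValueError("num_images, epochs and batch_size must be positive")
    steps_per_epoch = math.ceil(num_images / batch_size)
    return steps_per_epoch * epochs


def parse_gpu_ids(text):
    """Parse a comma-separated GPU ID string such as "1,3" into integers."""
    ids = [int(part) for part in text.split(",") if part.strip()]
    if not ids:
        raise ValueError("at least one GPU ID is required")
    if any(gpu_id < 0 for gpu_id in ids):
        raise ValueError("GPU IDs must be non-negative")
    if len(set(ids)) != len(ids):
        raise ValueError("GPU IDs must not repeat")
    return ids


# 1000 images with the defaults versus a batch size of 8.
print(training_steps(1000))                  # 1000 steps per epoch, 10 epochs
print(training_steps(1000, batch_size=8))    # 125 steps per epoch, 10 epochs
print(parse_gpu_ids("1,3"))
```

Under these assumptions, raising the batch size from 1 to 8 cuts the number of steps per epoch from 1000 to 125, which is why larger batches tend to shorten training at the cost of more memory per step.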

Related Content

Machine Learning Environment Configuration
