Model Training

Feature Description

Model training trains a neural network on generated training data. The model is evaluated iteratively according to the configured parameters, producing a neural network model suited to the application.

The system integrates open-source frameworks such as PyTorch and PaddlePaddle to train machine learning and deep learning models on different categories of datasets. Training runs over multiple epochs to optimize model performance. Pretrained models built on large-scale foundational training data shorten training time, and tuning hyperparameters such as Batch Size improves both training efficiency and accuracy.
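As a rough illustration of how Epochs and Batch Size interact, the sketch below counts the weight-update steps a generic epoch-based training loop performs. It assumes every training image is visited once per epoch and that the final, partial batch is kept rather than dropped. The function name `training_steps` is hypothetical and is not part of this tool, PyTorch, or PaddlePaddle.

```python
import math


def training_steps(num_images: int, batch_size: int, epochs: int) -> tuple[int, int]:
    """Return (steps_per_epoch, total_steps) for a simple epoch-based schedule.

    Assumes each image is seen once per epoch and the last partial batch is kept.
    """
    if num_images <= 0 or batch_size <= 0 or epochs <= 0:
        raise ValueError("num_images, batch_size and epochs must be positive")
    # One step processes one batch; a partial final batch still costs a step
    steps_per_epoch = math.ceil(num_images / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs


# 1,000 sample images, batch size 16, the default 10 epochs
per_epoch, total = training_steps(1000, 16, 10)
print(per_epoch, total)  # 63 630
```

A larger batch size means fewer steps per epoch, which is one reason it can shorten training time, provided each batch still fits in memory.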

Feature Entry

- Toolbox->Machine Learning->Video Analysis->Model Training

Parameter Description

- Training Data Path: Select the sample library folder generated from the managed image samples.

- Model Algorithm: Choose appropriate model algorithms according to application scenarios.

- Training Times (Epochs): The number of training epochs, default 10, maximum 300. More epochs help the model fit the training data but can cause overfitting, and training time grows in proportion to the number of epochs, so choose the value based on your requirements.

- Training Image Size: The image size used for training, default 640. Larger images improve detection accuracy but reduce the recall rate. The value must be a multiple of 32.

- Batch Size: The number of images processed in each training step, default 0. Within a reasonable range, a larger batch size consumes more memory but shortens training time. This parameter must be specified explicitly when using the YOLOv7 algorithm.

- Load Pretrained Model: When enabled, select a previous training log as the pretrained model path for incremental training. Note: The data dimensions, categories, and purpose must be consistent between training sessions.

- Model Storage Path: Set the output path for the trained model.

- Model Name: Specify the name of the model.

- Datasource: Configure the datasource used to store the output attribute table and image data.

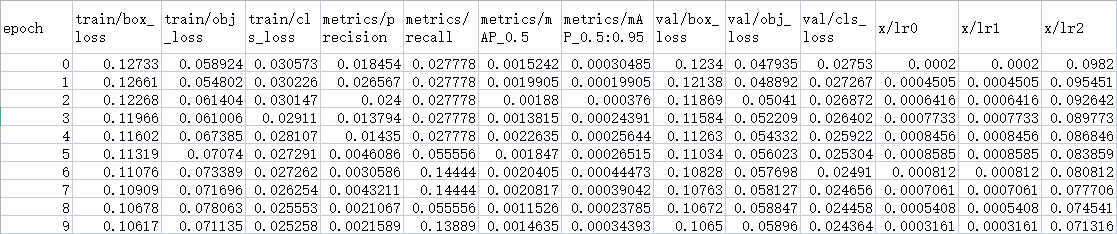

- Table: Output attribute table containing training results. A .csv file recording model accuracy metrics will be generated and converted to attribute table format.

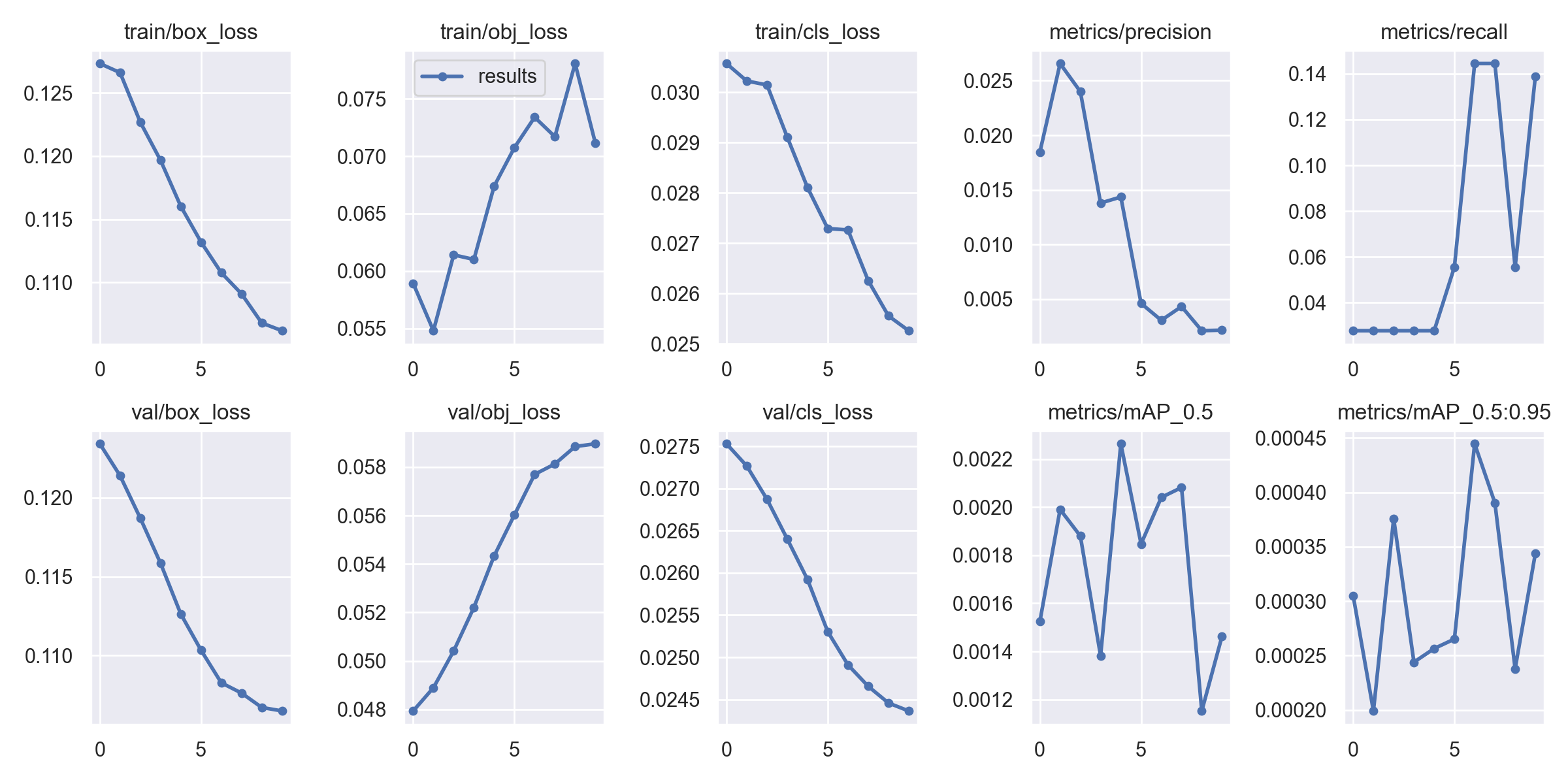

- Image: Output training visualization. Results will be presented as charts as shown:
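Before starting a long training run, it can help to check the settings against the limits described in the parameter list. The following is a minimal sketch, not the tool's own validation. The lower bound of 1 epoch, the rejection of negative batch sizes, reading a batch size of 0 as "not set", and the algorithm string `"yolov7"` are all assumptions, and the function names `check_training_params` and `snap_image_size` are hypothetical.

```python
MAX_EPOCHS = 300        # upper limit stated for Training Times (Epochs)
IMAGE_SIZE_STEP = 32    # Training Image Size must be a multiple of this


def snap_image_size(size: int) -> int:
    """Round a requested image size up to the nearest multiple of 32."""
    if size <= 0:
        raise ValueError("image size must be positive")
    # Ceiling division via negated floor division, then scale back up
    return -(-size // IMAGE_SIZE_STEP) * IMAGE_SIZE_STEP


def check_training_params(epochs: int = 10, image_size: int = 640,
                          batch_size: int = 0, algorithm: str = "") -> list[str]:
    """Return a list of problems; an empty list means these checks passed.

    Defaults mirror the documented defaults (10 epochs, size 640, batch 0).
    Assumptions: at least 1 epoch, batch_size 0 means "not set",
    and the YOLOv7 algorithm is identified by the string "yolov7".
    """
    problems = []
    if not 1 <= epochs <= MAX_EPOCHS:
        problems.append(f"epochs must be between 1 and {MAX_EPOCHS}, got {epochs}")
    if image_size <= 0 or image_size % IMAGE_SIZE_STEP != 0:
        problems.append(f"image size must be a positive multiple of {IMAGE_SIZE_STEP}, got {image_size}")
    if batch_size < 0:
        problems.append(f"batch size cannot be negative, got {batch_size}")
    if algorithm.lower() == "yolov7" and batch_size == 0:
        problems.append("YOLOv7 needs an explicit batch size")
    return problems


print(check_training_params())  # []
print(check_training_params(epochs=500, image_size=650, algorithm="YOLOv7"))
print(snap_image_size(650))  # 672
```

Rounding up in `snap_image_size` is one reasonable choice because it never shrinks the requested size; rounding down would satisfy the multiple-of-32 rule equally well.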
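The Table output is derived from a .csv file of accuracy metrics. To inspect that file outside the tool, for example to find the epoch with the best score, a sketch using Python's standard `csv` module could look like the following. The column names (`epoch`, `precision`, `recall`, `mAP50`) are assumptions, so check the header of the file your run actually produces. The sample rows are placeholder values, not real training results, and `best_epoch` is a hypothetical helper.

```python
import csv
import io
from typing import Iterable


def best_epoch(lines: Iterable[str], metric: str = "mAP50") -> dict:
    """Return the data row with the highest value in the `metric` column.

    Header names and values are stripped of surrounding spaces, since some
    training scripts pad the columns of their metrics .csv.
    """
    best = None
    for raw in csv.DictReader(lines):
        # Keep only well-formed string cells and strip padding from keys and values
        row = {k.strip(): v.strip() for k, v in raw.items()
               if isinstance(k, str) and isinstance(v, str)}
        if best is None or float(row[metric]) > float(best[metric]):
            best = row
    if best is None:
        raise ValueError("the metrics file has no data rows")
    return best


# Placeholder data standing in for the generated .csv (not real results);
# with a real file, pass open(path, newline="") instead.
sample = io.StringIO(
    "epoch,precision,recall,mAP50\n"
    "0,0.41,0.35,0.30\n"
    "1,0.55,0.48,0.47\n"
    "2,0.53,0.50,0.45\n"
)
print(best_epoch(sample)["epoch"])  # 1
```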

Related Topics

Video Analysis Environment Configuration