The video analysis and machine learning features in SuperMap iDesktopX require a Python environment and supporting scripts. Because these environment packages take up considerable disk space, they are not included in the base product package. To use the video analysis and machine learning features, download the extension package and complete a few simple configuration steps.

Recommended configurations for video analysis:

- For scenarios with more than 10 video streams (5-second detection interval per stream), where the CPU has the greatest impact on performance:

  - CPU: 2× Intel(R) Xeon(R) Gold 5218 CPU @ 2.30GHz (16 cores, 32 threads)

  - RAM: 32GB

  - Graphics Card: RTX 2060

  - Storage: SSD

- For scenarios with fewer than 10 video streams that require real-time analysis (detection + tracking), where the GPU has the greatest impact, followed by CPU and RAM:

  - CPU: 1× Intel(R) Xeon(R) Gold 5218 CPU @ 2.30GHz (16 cores, 32 threads), or i9-12900K for PyTorch models

  - RAM: 128GB (64GB for Windows)

  - Graphics Card: RTX 3090 or RTX 3090 Ti

  - Storage: SSD (capacity depends on the size of the saved video)

Recommended GPU configurations for machine learning:

- NVIDIA graphics card

- VRAM ≥10GB (minimum 6GB; ≥8GB required for object extraction or SegmentAnything models)

- Latest graphics drivers
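As an illustration of how the VRAM thresholds above combine, here is a minimal sketch. The function name `check_vram` and its return labels are hypothetical and not part of SuperMap iDesktopX; the numbers come from the list above.

```python
def check_vram(vram_gb: float, needs_large_model: bool = False) -> str:
    """Classify a GPU's VRAM against the thresholds listed above.

    needs_large_model: True for object extraction or SegmentAnything
    models, which raise the minimum from 6GB to 8GB.
    The labels returned here are illustrative, not product terminology.
    """
    minimum_gb = 8 if needs_large_model else 6
    if vram_gb < minimum_gb:
        return "insufficient"
    if vram_gb < 10:
        return "minimum"
    return "recommended"
```

For example, `check_vram(6)` meets the minimum for general models, while `check_vram(6, needs_large_model=True)` does not.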

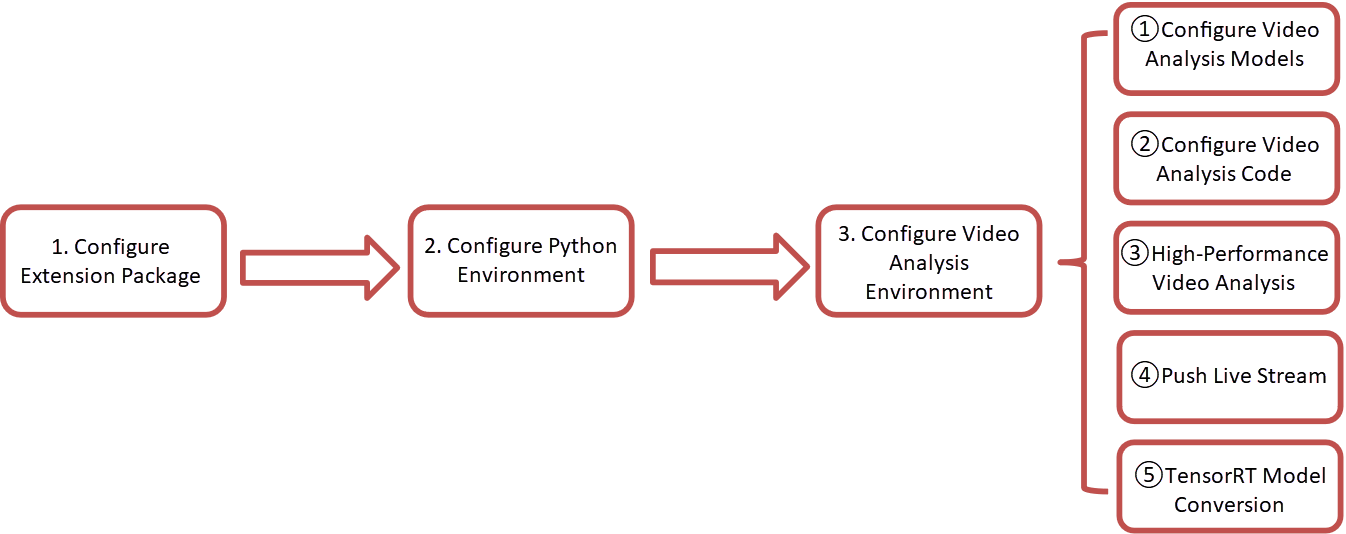

Environment configuration workflow:

The configuration process is shown in the flowchart above. Steps ③, ④, and ⑤ of the video analysis configuration are optional; configure them only if you need them.

1. Configure Extension Package

Obtain Extension Package

Download the SuperMap iDesktopX Extension_AI for Windows package (hereafter "extension package") that matches your SuperMap iDesktopX version from the SuperMap official website or Tech Hub.

The extension package contains:

- resources_ml: Machine learning resources including sample data and model configuration files

- support:

  - MiniConda: Runtime environment for AI analysis

- templates: Video effects resources

Configure Extension Package

Copy the resources_ml, support, and templates folders to the root directory of the product package. Make sure the path of the product package contains no Chinese characters.
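The copy step can also be scripted. The following is a minimal sketch, assuming the extension package has been extracted to a local folder and that the folder names match the package contents listed above. `install_extension` is a hypothetical helper, and the ASCII check is a stricter stand-in for the "no Chinese characters" rule.

```python
import shutil
from pathlib import Path

# Folder names assumed from the package contents listed above.
EXTENSION_FOLDERS = ("resources_ml", "support", "templates")


def install_extension(extension_dir, product_root):
    """Copy the extension folders into the product package root.

    Returns the names of the folders that were found and copied.
    """
    product_root = Path(product_root)
    # Stricter than required: reject any non-ASCII character in the path,
    # which also rules out Chinese characters.
    if not str(product_root.resolve()).isascii():
        raise ValueError(f"Non-ASCII characters in path: {product_root}")

    copied = []
    for name in EXTENSION_FOLDERS:
        source = Path(extension_dir) / name
        if source.is_dir():
            # dirs_exist_ok merges into an existing folder (Python 3.8+).
            shutil.copytree(source, product_root / name, dirs_exist_ok=True)
            copied.append(name)
    return copied
```

Calling `install_extension(r"D:\Extension_AI", r"D:\iDesktopX")` (both paths are placeholders) would return the list of folders it copied, so a missing folder is easy to spot.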

2. Configure Python Environment

- Open SuperMap iDesktopX and click Start tab -> Browse group -> Python button to open the Python Window below the map.

- Click the Python Environment Manager button in the Python Window toolbar.

- Click Add Exist Environment in the dialog.

- Select python.exe at [Product Package Root]/support/MiniConda/conda/python.exe. The Conda path is detected automatically.

- Click OK in the dialog. After the environment finishes loading, click OK in the Python Environment Manager.

- When prompted "The Python environment has been switched. Do you want to restart the Python process immediately?", click Yes to complete the configuration.
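After the Python process restarts, one way to confirm the switch took effect is to compare the running interpreter with the bundled one. This is a minimal sketch. The helper names are hypothetical, and the path layout is taken from the step above.

```python
import sys
from pathlib import Path


def expected_python(product_root):
    """Path of the bundled interpreter, per the layout described above."""
    return Path(product_root) / "support" / "MiniConda" / "conda" / "python.exe"


def uses_bundled_python(product_root, executable=None):
    """Return True if the interpreter is the one shipped in the extension package.

    executable defaults to the interpreter running this code.
    """
    current = Path(executable or sys.executable)
    return current.resolve() == expected_python(product_root).resolve()
```

Running `uses_bundled_python(r"D:\iDesktopX")` in the Python Window (the path is a placeholder for your product package root) should return `True` once the environment is configured.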

3. Configure Video Analysis Environment

Configure Video Analysis Models

- Download video analysis models matching your product version from: https://pan.baidu.com/s/1WZYDn6RJGsu05Gj-5vZZzQ?pwd=n4kd (Extraction code: n4kd)

- Copy the downloaded video-detection folder to [Product Package Root]/support

Configure Video Analysis Code

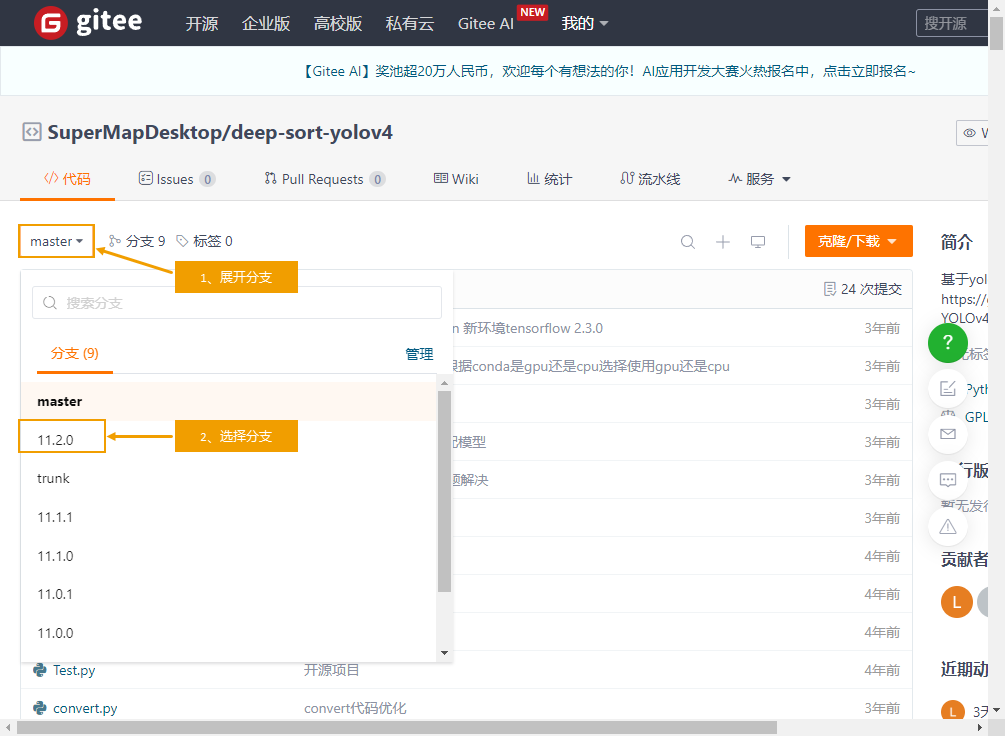

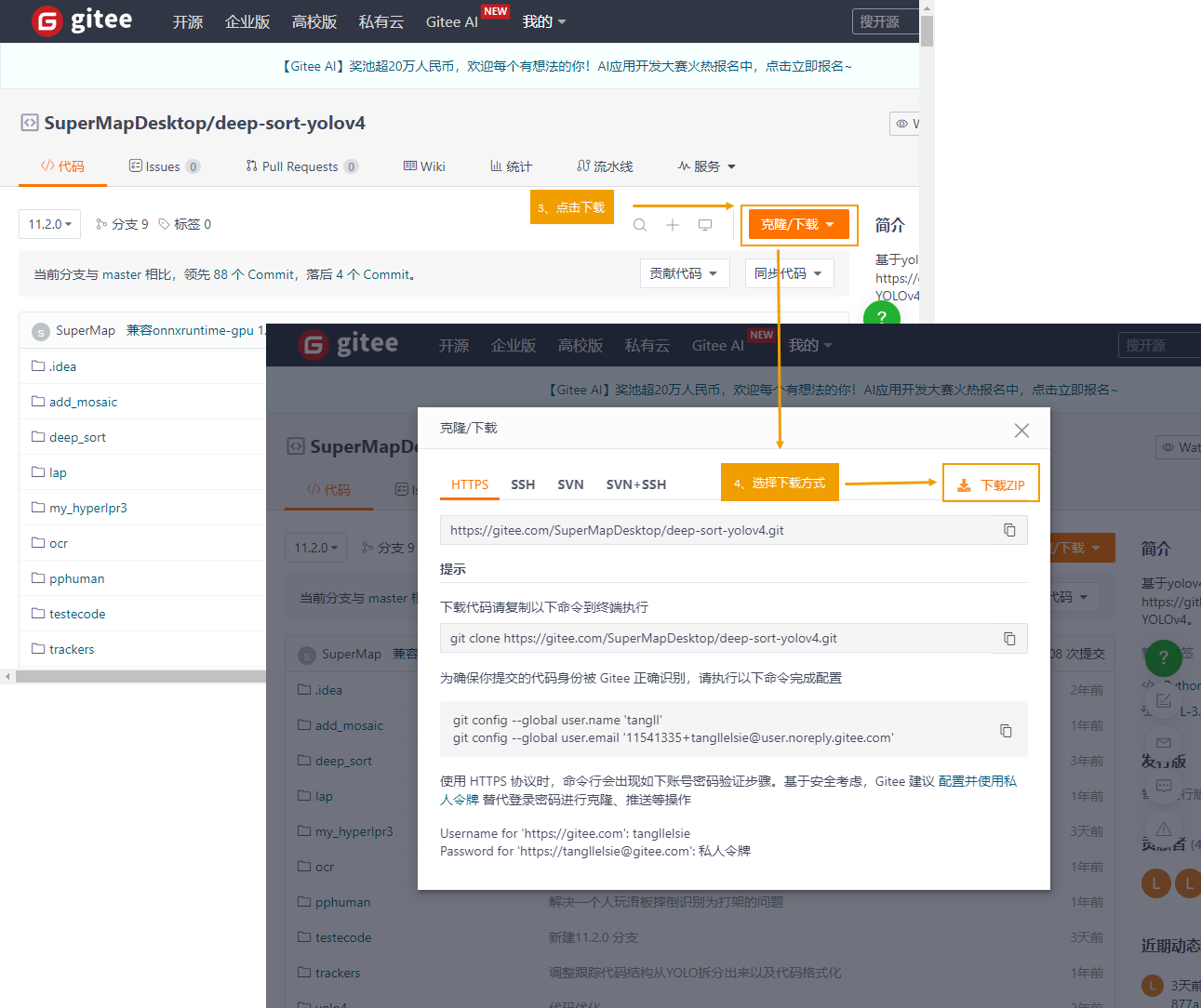

- Download the code package that matches your product version from https://gitee.com/SuperMapDesktop/deep-sort-yolov4

- Copy the contents of deep-sort-yolov4-x (x denotes the version number, e.g., deep-sort-yolov4-11.2.0) to [Product Package Root]/support/video-detection/deep-sort-yolov4

High-Performance Video Analysis Environment

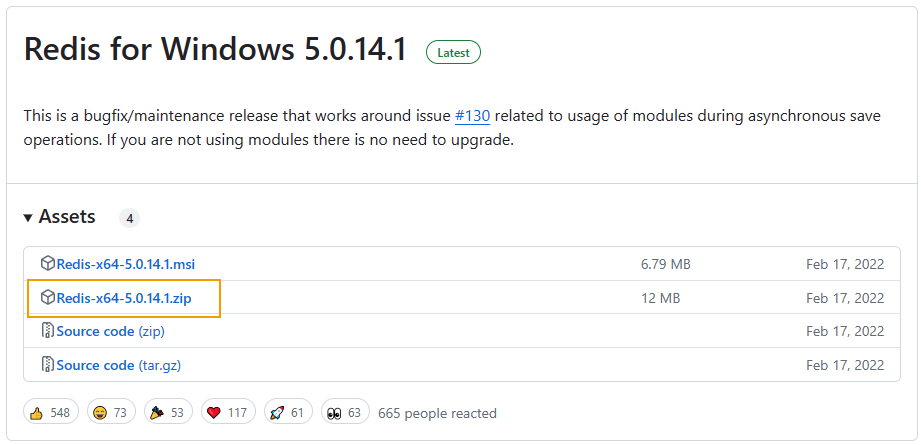

To improve detection performance, configure a Redis environment. Make sure Redis is running before you use high-performance detection:

- Download Redis and extract the files

- Launch redis-server.exe from the Redis package directory

- Change HighPerformanceDetection="false" to HighPerformanceDetection="true" in [Product Package Root]/configuration/Desktop.Parameter.xml

- Launch SuperMap iDesktopX
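The Redis check and the configuration change above can be sketched as follows. This assumes Redis listens on its default port 6379 on the local machine and that the setting appears in the file literally as `HighPerformanceDetection="false"`. Both function names are hypothetical.

```python
import socket


def redis_is_listening(host="127.0.0.1", port=6379, timeout=1.0):
    """Return True if something accepts TCP connections on host:port.

    This only shows that a server is listening on the port; it does not
    verify that the server is actually Redis.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def enable_high_performance(xml_text):
    """Switch the HighPerformanceDetection flag from "false" to "true".

    Plain text replacement, so the rest of the file is left untouched.
    """
    return xml_text.replace(
        'HighPerformanceDetection="false"',
        'HighPerformanceDetection="true"',
    )
```

A typical use would be to read Desktop.Parameter.xml, pass its text through `enable_high_performance`, write the result back, and then start SuperMap iDesktopX only if `redis_is_listening()` returns `True`. Back up the file before editing it.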

Push Live Stream

To stream analysis results for viewing on the web, additional configuration is required:

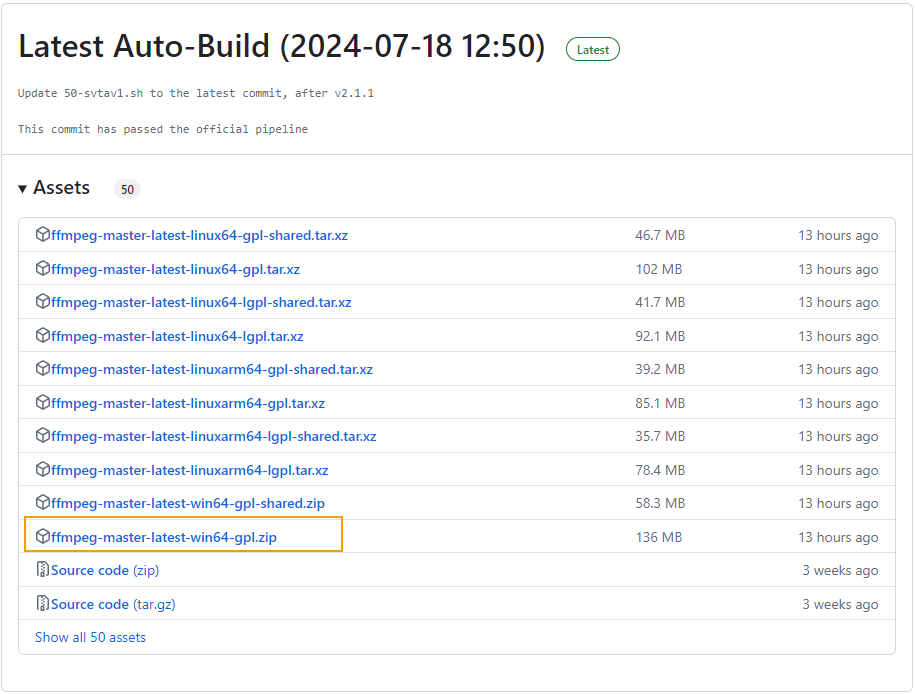

- Download ffmpeg from https://github.com/BtbN/FFmpeg-Builds/releases

- Copy ffmpeg.exe to [Product Package Root]/support/video-detection/Tools/

TensorRT Model Conversion

SuperMap iDesktopX supports converting YOLOv5 PyTorch models to TensorRT format (Windows 10 only):

- Install Miniconda3 (Python 3.8) from https://docs.conda.io/en/latest/miniconda.html

- Download and extract the TensorRT package from https://developer.nvidia.com/nvidia-tensorrt-8x-download

- Run Miniconda3 as administrator

- Execute conda activate [Conda Path], where [Conda Path] is the Conda path in the AI extension package

- In the TensorRT directory, install the components in the following order:

    cd .\graphsurgeon\
    pip install .\graphsurgeon-0.4.6-py2.py3-none-any.whl
    cd ../
    cd .\uff\
    pip install .\uff-0.6.9-py2.py3-none-any.whl
    cd ../
    cd .\onnx_graphsurgeon\
    pip install .\onnx_graphsurgeon-0.3.12-py2.py3-none-any.whl
    cd ../
    cd .\python\
    pip install .\tensorrt-8.6.1-cp38-none-win_amd64.whl

- Copy all DLL files from TensorRT/lib to [Product Package Root]/support/video-detection/TensorRT/
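The final DLL copy step can be scripted as well. This is a minimal sketch, assuming the TensorRT package was extracted to a folder that contains a lib subfolder. `copy_tensorrt_dlls` is a hypothetical helper, and the target path follows the step above.

```python
import shutil
from pathlib import Path


def copy_tensorrt_dlls(tensorrt_dir, product_root):
    """Copy every .dll from TensorRT/lib into the video-detection folder.

    Returns the sorted names of the files that were copied.
    """
    source = Path(tensorrt_dir) / "lib"
    target = Path(product_root) / "support" / "video-detection" / "TensorRT"
    target.mkdir(parents=True, exist_ok=True)

    copied = []
    for dll in sorted(source.glob("*.dll")):
        shutil.copy2(dll, target / dll.name)  # copy2 keeps file timestamps
        copied.append(dll.name)
    return copied
```

An empty return value indicates that no DLL files were found, which usually means `tensorrt_dir` does not point at the extracted TensorRT folder.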