SuperMap iPortal AI Assistant supports rapid deployment with Docker Compose, a tool for defining and running multi-container Docker applications. A YAML file describes the application services and their dependencies, so the whole containerized environment can be started, stopped, and managed with a single command.

Preparing Image Packages

Before deploying with Docker Compose, prepare the required images. Both online and offline installation methods are supported:

Offline Installation

Obtain the image packages from the network disk; both X86 and ARM versions are available. Using the X86 version as an example, extract the supermap-iportal-ai-assistant-2025-linux-x64-images.tar.gz package, which contains the following required images:

- agentx-auto-agent service image

- iportal-ai-assistant service image

- iportal-ai-assistant-server service image

Load the images with:

docker load -i iportal-ai-assistant-12.0.0.0-amd64.tar

docker load -i iportal-ai-assistant-server-12.0.0.0-amd64.tar

docker load -i agentx-auto-agent-12.0.0.0-amd64.tar
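The three commands above differ only in the image name and the architecture suffix, so they can also be generated from the ARCH value that is set later in .env. The following is a minimal bash sketch, not part of the official package. It assumes the tarballs are in the current directory and that the ARM package uses the same `<image>-12.0.0.0-arm64.tar` naming (only the amd64 file names are shown above). The helper name `print_load_commands` is hypothetical, and the script only prints the commands so they can be reviewed before running.

```shell
#!/usr/bin/env bash
# Print the "docker load" commands for the three AI Assistant image tarballs.
# Assumptions: the tarballs are in the current directory and follow the
# <image>-<version>-<arch>.tar naming of the X86 package; arm64 naming is
# assumed by analogy.

VERSION="12.0.0.0"
IMAGES="iportal-ai-assistant iportal-ai-assistant-server agentx-auto-agent"

# print_load_commands ARCH -> one "docker load" line per image
print_load_commands() {
  local arch="$1" image
  case "$arch" in
    amd64|arm64) ;;
    *) echo "unsupported ARCH: $arch" >&2; return 1 ;;
  esac
  for image in $IMAGES; do
    printf 'docker load -i %s-%s-%s.tar\n' "$image" "$VERSION" "$arch"
  done
}

# Preview the commands; pipe the output to sh to execute them.
print_load_commands "${ARCH:-amd64}"
```

For example, run `ARCH=arm64 bash load-images.sh` to preview the commands, then `ARCH=arm64 bash load-images.sh | sh` to execute them (`load-images.sh` is a hypothetical file name for the script).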

Online Installation

1. Log in to the Alibaba Cloud container registry (password: supermap@123):

docker login --username=478386058@qq.com registry.cn-chengdu.aliyuncs.com

2. Pull the required images:

docker pull registry.cn-chengdu.aliyuncs.com/supermap/agentx-auto-agent:12.0.0.0-amd64

docker pull registry.cn-chengdu.aliyuncs.com/supermap/iportal-ai-assistant:12.0.0.0-amd64

docker pull registry.cn-chengdu.aliyuncs.com/supermap/iportal-ai-assistant-server:12.0.0.0-amd64
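The pull commands follow one pattern: registry, image name, and a `<version>-<arch>` tag. A minimal bash sketch of that pattern follows; the arm64 tag is assumed by analogy with the amd64 tags above, and `image_ref` and `print_pull_commands` are hypothetical helper names. It prints the commands for review; after logging in, pipe the output to `sh` to execute them.

```shell
#!/usr/bin/env bash
# Print the "docker pull" commands for the AI Assistant images hosted on the
# Alibaba Cloud registry. Assumption: arm64 images use the same
# <version>-<arch> tag pattern as the amd64 tags listed above.

REGISTRY="registry.cn-chengdu.aliyuncs.com/supermap"
VERSION="12.0.0.0"
IMAGES="agentx-auto-agent iportal-ai-assistant iportal-ai-assistant-server"

# image_ref IMAGE ARCH -> fully qualified image reference
image_ref() {
  printf '%s/%s:%s-%s\n' "$REGISTRY" "$1" "$VERSION" "$2"
}

# print_pull_commands ARCH -> one "docker pull" line per image
print_pull_commands() {
  local image
  for image in $IMAGES; do
    printf 'docker pull %s\n' "$(image_ref "$image" "$1")"
  done
}

# Preview the commands; pipe the output to sh to execute them.
print_pull_commands "${ARCH:-amd64}"
```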

Modifying Configuration Files

Obtain the configuration files from the docker-compose folder on the network disk and modify them as needed:

| File Name | Description |
|---|---|
| .env | Environment variable configuration |
| application-app.yml | AI Assistant Server configuration |
| docker-compose.yaml | Docker Compose configuration |
| graphrag/.env | GraphRAG environment variable configuration |

.env File Configuration

# Common settings

...

## Host CPU architecture

ARCH=amd64

## License service

BSLICENSE_SERVER=ws://172.16.168.199:9183

...

## iPortal service

IPORTAL_SERVER=http://172.16.168.198:8190

IPORTAL_URL=http://172.16.168.198:8190/iportal

# openai-format LLM configuration

DEFAULT_LLM_MODEL=Qwen/Qwen3-14B

LLM_SERVER=http://127.0.0.1:8000/v1

LLM_KEY=EMPTY

LLM_MODEL=Qwen/Qwen3-14B

# openai-format embedding model configuration

DEFAULT_EMBED_MODEL=bge-m3

EMBED_SERVER=http://127.0.0.1:8001/v1

EMBED_KEY=EMPTY

EMBED_MODEL=bge-m3

Modify the following values in the .env file as needed:

- ARCH: Host CPU architecture (amd64 for X86-64/X64/AMD64, arm64 for ARM64)

- BSLICENSE_SERVER: License center address, obtained after installing Web License

- IPORTAL_SERVER: iPortal root address (http://[IP]:8190)

- IPORTAL_URL: iPortal access address (http://[IP]:8190/iportal)

- DEFAULT_LLM_MODEL: Default LLM name, used when no model is specified

- LLM_SERVER: LLM service address (http://[IP]:8000/v1)

- LLM_KEY: LLM access key (optional)

- LLM_MODEL: LLM name

- DEFAULT_EMBED_MODEL: Default embedding model name, used when no model is specified

- EMBED_SERVER: Embedding model service address (http://[IP]:8001/v1)

- EMBED_KEY: Embedding model access key (optional)

- EMBED_MODEL: Embedding model name
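Before starting the services, it can help to confirm that the model addresses in .env are reachable. OpenAI-compatible servers generally expose a `GET /models` endpoint under the `/v1` base path, so a minimal bash sketch can query `${LLM_SERVER}/models` and `${EMBED_SERVER}/models`. This assumes curl is installed, that .env contains plain `KEY=value` lines without quotes, and that both model services implement that endpoint; `env_get` and `check_models` are hypothetical helper names. Run it from the directory that contains .env.

```shell
#!/usr/bin/env bash
# Check that the LLM and embedding services configured in .env answer an
# OpenAI-style "GET /models" request before the stack is started.
# Assumptions: curl is installed, .env uses plain KEY=value lines, and both
# services implement the OpenAI "list models" endpoint.

# env_get FILE KEY -> value of the last KEY=... line in FILE (empty if absent)
env_get() {
  sed -n "s/^$2=//p" "$1" | tail -n 1
}

# check_models SERVER KEY -> 0 if SERVER/models answers with a status below 400
check_models() {
  curl -fsS -m 5 -o /dev/null -H "Authorization: Bearer $2" "${1%/}/models"
}

if [ -f .env ]; then
  for prefix in LLM EMBED; do
    server="$(env_get .env "${prefix}_SERVER")"
    key="$(env_get .env "${prefix}_KEY")"
    if check_models "$server" "$key"; then
      echo "$prefix service reachable at $server"
    else
      echo "$prefix service did not answer $server/models" >&2
    fi
  done
fi
```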

application-app.yml File Configuration

...

iPortal:

  api:

    addr: http://172.16.168.198:8190/iportal

...

spring:

  ai:

    openai:

      chat:

        enabled: true

        base-url: http://127.0.0.1:8000

        options:

          model: Qwen/Qwen3-14B

Modify the following values in application-app.yml as needed:

- iPortal.api.addr: iPortal access address

- spring.ai.openai.chat.enabled: Whether to enable the LLM service (default: true)

- spring.ai.openai.chat.base-url: LLM service address (http://[IP]:8000; unlike LLM_SERVER in .env, the example value has no /v1 suffix)

- spring.ai.openai.chat.options.model: LLM name
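In the example values, spring.ai.openai.chat.base-url is the .env LLM_SERVER address without its `/v1` suffix, and options.model matches LLM_MODEL. Assuming that relationship holds for your deployment, the following bash sketch prints a matching `spring.ai.openai.chat` fragment to compare with the corresponding section of application-app.yml. `render_chat_yaml` is a hypothetical helper, and its defaults are the example values from this page.

```shell
#!/usr/bin/env bash
# Print the spring.ai.openai.chat fragment of application-app.yml from the
# LLM_SERVER and LLM_MODEL values used in .env.
# Assumption (taken from the examples): base-url is LLM_SERVER minus "/v1".

# render_chat_yaml LLM_SERVER LLM_MODEL
render_chat_yaml() {
  local server="${1%/}" model="$2"
  server="${server%/v1}"   # drop the OpenAI-style /v1 suffix if present
  cat <<EOF
spring:
  ai:
    openai:
      chat:
        enabled: true
        base-url: ${server}
        options:
          model: ${model}
EOF
}

render_chat_yaml "${LLM_SERVER:-http://127.0.0.1:8000/v1}" "${LLM_MODEL:-Qwen/Qwen3-14B}"
```

For example, `LLM_SERVER=http://192.168.1.10:8000/v1 bash render-chat.sh` prints a base-url of `http://192.168.1.10:8000` (the address and script name are illustrative).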

graphrag/.env File Configuration

...

### OpenAI alike example

LLM_BINDING=openai

LLM_MODEL=Qwen/Qwen3-14B

LLM_BINDING_HOST=http://127.0.0.1:8000/v1

LLM_BINDING_API_KEY=EMPTY

### Embedding Configuration

EMBEDDING_MODEL=bge-m3

EMBEDDING_DIM=1024

EMBEDDING_BINDING_API_KEY=EMPTY

### OpenAI alike example

EMBEDDING_BINDING=openai

EMBEDDING_BINDING_HOST=http://127.0.0.1:8001/v1

...

Modify the following values in the graphrag/.env file as needed:

- LLM_MODEL: LLM name

- LLM_BINDING_HOST: LLM service address

- LLM_BINDING_API_KEY: LLM access key

- EMBEDDING_BINDING_HOST: Embedding model service address

- EMBEDDING_BINDING_API_KEY: Embedding model access key
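In the examples, graphrag/.env points at the same LLM and embedding services as .env, only under different variable names (for instance, LLM_SERVER corresponds to LLM_BINDING_HOST). Assuming your deployment also shares those services, a minimal bash sketch can compare the paired keys and report any that differ. The key pairs come from the two example files; `env_get` and `compare_env` are hypothetical helpers, and the script expects to run in the docker-compose folder.

```shell
#!/usr/bin/env bash
# Compare the model settings in .env with those in graphrag/.env and report
# any pair that differs. Assumption: both files point at the same LLM and
# embedding services, as they do in the example values.

# env_get FILE KEY -> value of the last KEY=... line in FILE (empty if absent)
env_get() {
  sed -n "s/^$2=//p" "$1" | tail -n 1
}

# "<key in .env>:<key in graphrag/.env>" pairs taken from the example files
PAIRS="LLM_MODEL:LLM_MODEL LLM_SERVER:LLM_BINDING_HOST LLM_KEY:LLM_BINDING_API_KEY EMBED_MODEL:EMBEDDING_MODEL EMBED_SERVER:EMBEDDING_BINDING_HOST EMBED_KEY:EMBEDDING_BINDING_API_KEY"

# compare_env MAIN_ENV GRAPHRAG_ENV -> prints mismatches, returns 1 if any
compare_env() {
  local main="$1" graphrag="$2" pair a b va vb status=0
  for pair in $PAIRS; do
    a="${pair%%:*}"
    b="${pair#*:}"
    va="$(env_get "$main" "$a")"
    vb="$(env_get "$graphrag" "$b")"
    if [ "$va" != "$vb" ]; then
      echo "mismatch: $a=$va (.env) vs $b=$vb (graphrag/.env)"
      status=1
    fi
  done
  return "$status"
}

if [ -f .env ] && [ -f graphrag/.env ]; then
  compare_env .env graphrag/.env && echo "model settings are consistent"
fi
```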

Starting SuperMap iPortal AI Assistant Service

After completing the configuration, run the following command in the directory containing docker-compose.yaml:

docker compose up -d
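`docker compose up -d` returns once the containers have been started, which does not guarantee that the services inside are ready. The following bash sketch lists the containers with `docker compose ps` and then polls the API UI address given in this section (port 8080) until it answers. It assumes curl is installed and takes the host IP as its first argument; `wait_for_url` is a hypothetical helper, and the limit of 60 attempts, 2 seconds apart, is an arbitrary choice.

```shell
#!/usr/bin/env bash
# After "docker compose up -d", poll the AI Assistant API UI until it answers.
# Assumptions: curl is installed and the API UI listens on port 8080;
# pass the host IP as the first argument.

# wait_for_url URL [MAX_TRIES] -> 0 once URL returns a status below 400
wait_for_url() {
  local url="$1" tries="${2:-30}" i=1
  while [ "$i" -le "$tries" ]; do
    if curl -fsS -m 5 -o /dev/null "$url"; then
      return 0
    fi
    # Pause between attempts, but not after the last one
    if [ "$i" -lt "$tries" ]; then
      sleep 2
    fi
    i=$((i + 1))
  done
  return 1
}

if [ -n "${1:-}" ]; then
  docker compose ps
  if wait_for_url "http://$1:8080/swagger-ui/index.html" 60; then
    echo "AI Assistant API UI is up"
  else
    echo "API UI not reachable; check 'docker compose logs'" >&2
  fi
fi
```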

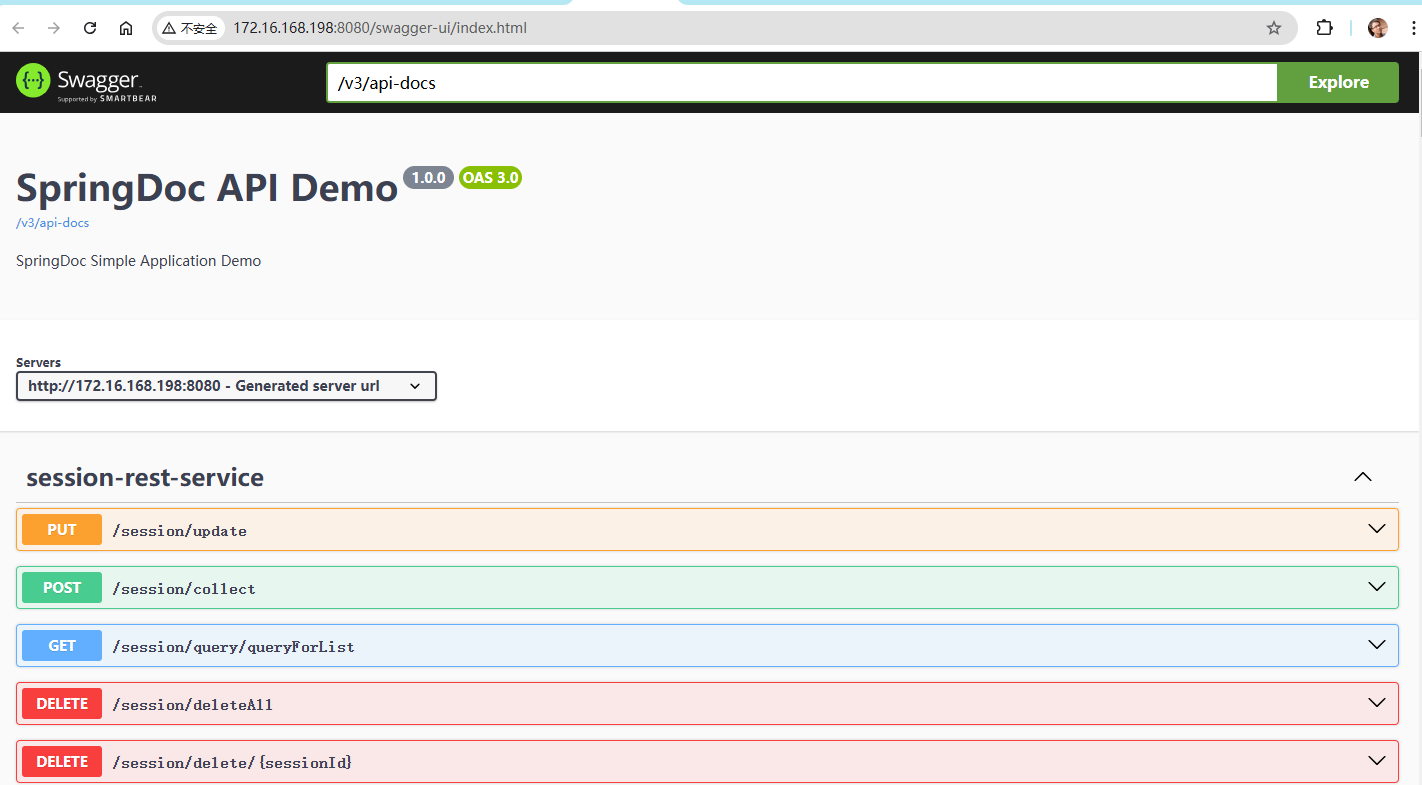

- AI Assistant API UI: http://[IP]:8080/swagger-ui/index.html

Figure: SuperMap iPortal AI Assistant API UI

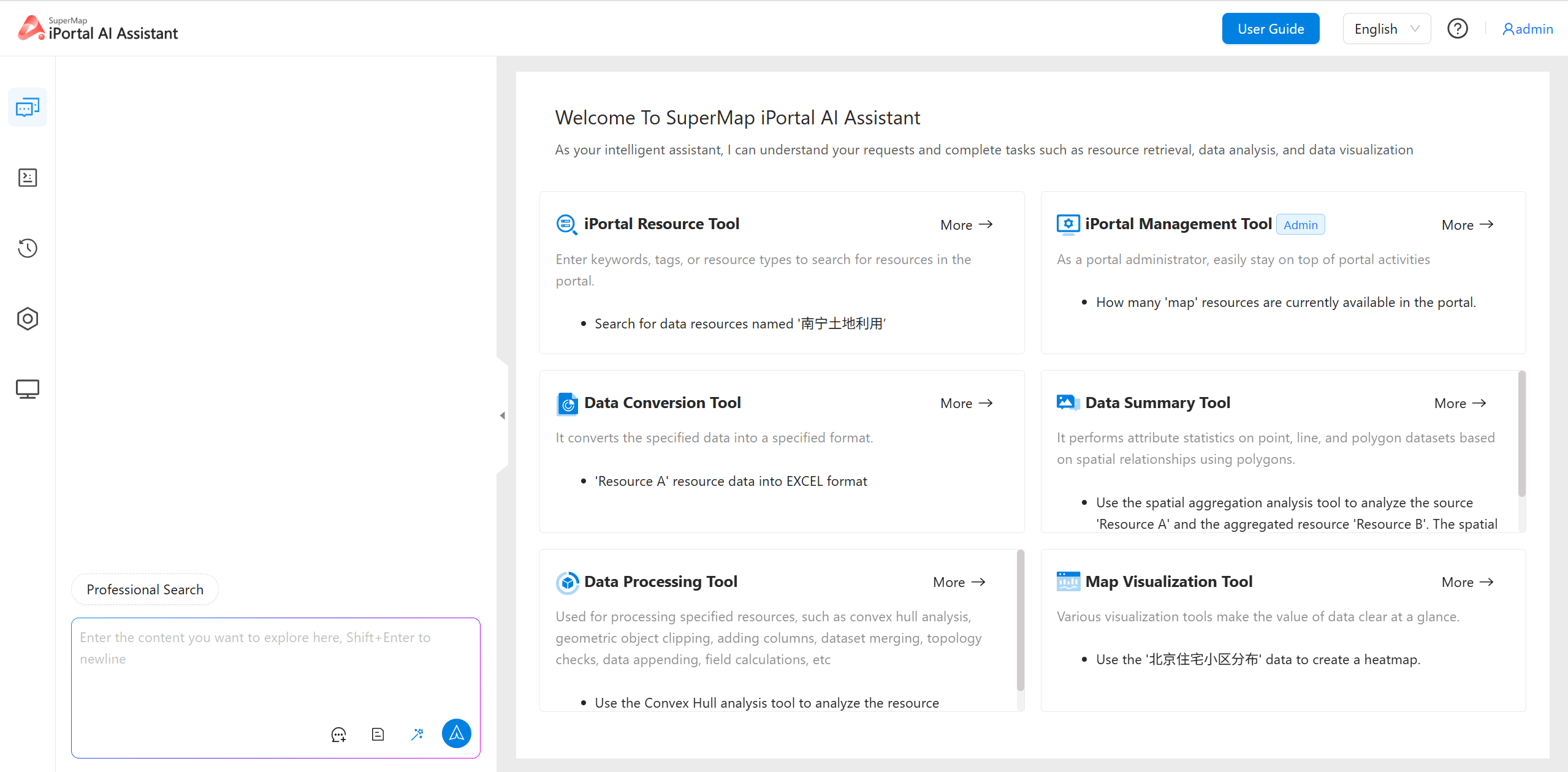

- AI Assistant Homepage: http://[IP]/iportal/apps/AIAssistant/index.html

Log in with SuperMap iPortal credentials to access the AI Assistant.

Figure: SuperMap iPortal AI Assistant Homepage

Uninstalling AI Assistant Service

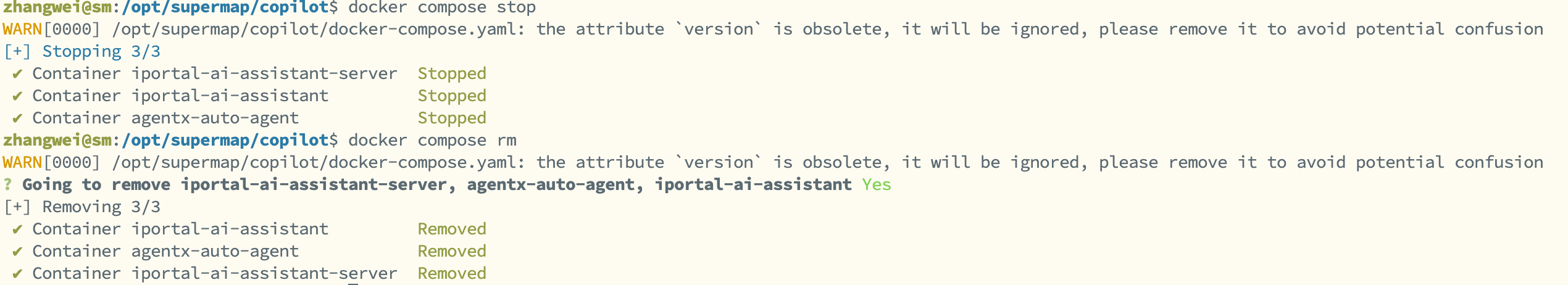

To uninstall the Docker Compose deployment, run the following commands in the directory containing docker-compose.yaml:

docker compose stop

docker compose rm

Figure: SuperMap iPortal AI Assistant Uninstalled

Remote Sensing Tools Supplement

Complete the following additional configuration to enable the remote sensing tools:

Configuring Python Environment

Download the Python environment package (supermap-iobjectspy-env-cpu.zip) from the "Component GIS" section of the official resource center, and extract the conda folder to {iServer_home}/support/python.

Configuring Machine Learning Resources

Download the machine learning resource package (supermap-iobjectspy-resources-ml.zip) and extract the resources-ml folder to {iServer_home}/support/python. The package contains sample data and models.

- These resources are required for deep learning features such as binary classification and object detection.

- Machine learning services do not support Web License; use a standard or cloud license instead.
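The Python environment and machine learning resource steps above both unpack a single folder from an archive into {iServer_home}/support/python. A minimal bash sketch of those two steps follows. It assumes unzip is installed, ISERVER_HOME is set to the iServer installation directory, both archives are in the current directory, and each archive holds a top-level conda/ or resources-ml/ folder; the archive layout is not documented here, so check it with `unzip -l` first. `python_support_dir` and `extract_folder` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Unpack the Python environment and the machine learning resources into
# {iServer_home}/support/python.
# Assumptions: unzip is installed, both archives are in the current
# directory, and each holds a top-level conda/ or resources-ml/ folder.

# python_support_dir ISERVER_HOME -> target directory for both archives
python_support_dir() {
  printf '%s/support/python\n' "${1%/}"
}

# extract_folder ZIP FOLDER DEST -> extract only the FOLDER/ tree of ZIP into DEST
extract_folder() {
  mkdir -p "$3" && unzip -q -o "$1" "$2/*" -d "$3"
}

if [ -n "${ISERVER_HOME:-}" ]; then
  dest="$(python_support_dir "$ISERVER_HOME")"
  extract_folder supermap-iobjectspy-env-cpu.zip conda "$dest" &&
    extract_folder supermap-iobjectspy-resources-ml.zip resources-ml "$dest" &&
    ls "$dest"
fi
```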

Configuring Remote Sensing Package

Download the Remote Sensing Capability Extension Package from the network disk, then:

- Place iportal-gpa-extension-1.0.0-SNAPSHOT.jar in {iServer_home}/support/geoprocessing/lib

- Extract the model folder to {iServer_home}/webapps/iserver
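The two placement steps can be scripted as well. This bash sketch assumes the extension package has already been unpacked into the current directory, so that the jar and the model folder sit side by side, and that ISERVER_HOME points at the iServer installation; `install_extension` is a hypothetical helper. It refuses to run when {iServer_home}/webapps/iserver does not exist, which catches a mistyped installation path.

```shell
#!/usr/bin/env bash
# Copy the remote sensing extension into an iServer installation.
# Assumptions: the package is unpacked in SRC_DIR (jar and model/ side by
# side) and ISERVER_HOME points at the iServer installation directory.

JAR="iportal-gpa-extension-1.0.0-SNAPSHOT.jar"

# install_extension ISERVER_HOME SRC_DIR -> copy the jar and model/ into place
install_extension() {
  local home="${1%/}" src="${2%/}"
  # Refuse to continue if the path does not look like an iServer installation
  if [ ! -d "$home/webapps/iserver" ]; then
    echo "not an iServer installation: $home" >&2
    return 1
  fi
  mkdir -p "$home/support/geoprocessing/lib" &&
    cp "$src/$JAR" "$home/support/geoprocessing/lib/" &&
    cp -R "$src/model" "$home/webapps/iserver/"
}

if [ -n "${ISERVER_HOME:-}" ]; then
  install_extension "$ISERVER_HOME" . && echo "extension files copied to $ISERVER_HOME"
fi
```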

The following commercial models require separate authorization:

| Model Name | Authorization ID |
|---|---|
| Land Cover Pre-trained Model (LIM_LULC) | 33001 |
| Building Extraction Model (PIM_BUILDING) | 33101 |
| Building Change Detection Model | 33109 |
| Road Extraction Model | 33107 |