GIS Cloud Suite

FAQ

-

How to solve the problem that SuperMap iDesktop cannot connect to the database in Oracle?

Answer: Create a new tablespace and user in Oracle XE and use them for the connection. Follow the steps below:

(1) On the iManager page, click Database > Oracle > Name (the name of your database) > oracle > Command Pad to open the Oracle command pad.

(2) Create a folder named ‘supermapshapefile’ under the ‘/u01/app/oracle/oradata’ directory (skip this step if the folder already exists). Execute:

```shell
mkdir -p /u01/app/oracle/oradata/supermapshapefile
```

(3) Change the owner of the folder to oracle. Execute:

```shell
chown oracle /u01/app/oracle/oradata/supermapshapefile
```

(4) Start SQL*Plus as SYSDBA. Execute:

```shell
sqlplus / as sysdba
```

(5) Enter the Oracle username and password if prompted. You can view the account information by clicking Database > Oracle > Name > Account on the iManager page.

(6) Create a tablespace with a size of 200M (you can adjust the size to your requirements). Execute:

```sql
create tablespace supermaptbs datafile '/u01/app/oracle/oradata/supermapshapefile/data.dbf' size 200M;
```

(7) Create a new user whose default tablespace is the new tablespace, for example, username supermapuser and password supermap123. Execute:

```sql
create user supermapuser identified by supermap123 default tablespace supermaptbs;
```

(8) Grant permissions to the new user. Execute:

```sql
grant connect, resource to supermapuser;
grant dba to supermapuser;
```

-

The Spark-worker node sometimes goes offline or dies while performing distributed analysis on the Spark cluster. How to solve it?

Answer: Distributed analysis consumes a lot of resources, so the Spark-worker node responds more slowly. If the Spark-master node does not receive the heartbeat of a Spark-worker node in time, it considers that worker lost. To prevent this, extend the worker timeout, that is, how long the master waits for a heartbeat before marking a worker as lost.

(1) Click Computing Resources > Spark Cluster > Console > spark-master > Command pad to open the command pad of the Spark-master container.

(2) Go to the directory that contains the spark-env.sh file:

```shell
cd spark/conf
```

(3) Open the spark-env.sh file:

```shell
vi spark-env.sh
```

(4) Add the worker timeout to the file (the default is 180 seconds; the example below sets it to 1800 seconds):

```shell
export SPARK_MASTER_OPTS="-Dspark.worker.timeout=1800"
```

(5) Save and exit the spark-env.sh file.

(6) Redeploy the Spark-master and Spark-worker nodes on the Spark Cluster Console page.
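The edit in steps (2) to (5) can also be scripted so that running it twice does not leave duplicate lines. The sketch below is a hypothetical helper, not part of GIS Cloud Suite, and assumes it runs inside the Spark-master container; it removes any earlier `SPARK_MASTER_OPTS` export before appending the new one, so other options you may have put in that variable are replaced as well.

```shell
#!/bin/sh
# Hypothetical helper (not part of GIS Cloud Suite): set spark.worker.timeout,
# in seconds, in spark-env.sh without duplicating an existing setting.
# Note: this replaces any other options already held in SPARK_MASTER_OPTS.
# Usage: set_worker_timeout <path-to-spark-env.sh> <seconds>
set_worker_timeout() {
  local env_file timeout tmp_file
  env_file="$1"
  timeout="$2"
  tmp_file="${env_file}.tmp"
  # Create the file if it does not exist yet
  touch "$env_file" || return 1
  # Copy every line except an earlier SPARK_MASTER_OPTS export
  sed '/^export SPARK_MASTER_OPTS=/d' "$env_file" > "$tmp_file" || return 1
  # Append the new worker timeout
  printf 'export SPARK_MASTER_OPTS="-Dspark.worker.timeout=%s"\n' "$timeout" >> "$tmp_file"
  # Write back in place so the original file keeps its owner and permissions
  cat "$tmp_file" > "$env_file" && rm -f "$tmp_file"
}
```

For example, `set_worker_timeout spark/conf/spark-env.sh 1800` produces the same line as step (4); redeploy the nodes afterwards as in step (6).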

-

Why is the font displayed in the map service different from the font used when making the map?

Answer: The service node that the map service runs on does not have the font used when making the map. Follow the steps below to add the font:

(1) Click File Management on the left navigation bar and upload the font file to the system/fonts folder.

(2) Redeploy the service node that the map service runs on.

-

If you redeploy or adjust the spec immediately after a previous redeployment or spec adjustment, license assignment fails. How to solve the problem?

Answer: After redeploying or adjusting the spec, make sure the license has been successfully assigned to the services before performing other operations, such as redeploying or adjusting the spec again.

-

When viewing monitoring statistics charts, the charts do not display data, or the time of the data does not match the actual time. How to solve the problem?

Answer: Make sure the time settings of the local machine and the Kubernetes node machines are the same.
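To check whether the clocks actually differ, you can compare Unix timestamps taken on the local machine and on each node. A minimal sketch; `clock_skew` and its 5-second default threshold are illustrative choices, not product settings.

```shell
#!/bin/sh
# Hypothetical helper: print the absolute difference in seconds between two
# Unix timestamps and return non-zero if it exceeds max_skew (default 5 s).
# Usage: clock_skew <local-epoch> <remote-epoch> [max-seconds]
clock_skew() {
  local diff max_skew
  diff=$(( $1 - $2 ))
  max_skew="${3:-5}"
  # Take the absolute value so the order of the arguments does not matter
  if [ "$diff" -lt 0 ]; then
    diff=$(( -diff ))
  fi
  echo "$diff"
  [ "$diff" -le "$max_skew" ]
}
```

For example, `clock_skew "$(date +%s)" "$(ssh <node-ip> date +%s)"` prints the skew against one node, assuming SSH access to the node (`<node-ip>` is a placeholder). This compares clocks only, so also confirm that the time zones match, for example with `timedatectl` on each Linux machine.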

-

After built-in environments (such as Spark Cluster and Kafka Cluster) are disabled and then re-enabled, they do not work. How to solve the problem?

Answer: The service ports change after the built-in environments are re-enabled. Update the related service port configurations to the new ports.
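To find the new ports, you can list the services in the GIS Cloud Suite namespace with `kubectl -n <namespace> get svc`. For a service of type NodePort, the PORT(S) column shows entries such as `7077:31077/TCP` (an illustrative value), where the number after the colon is the port reachable from outside the cluster. The sketch below assumes the built-in environments are exposed as NodePort services; `node_ports` is a hypothetical helper. For a single service, `kubectl -n <namespace> get svc <service> -o jsonpath='{.spec.ports[*].nodePort}'` returns the same numbers directly.

```shell
#!/bin/sh
# Hypothetical helper: print the node ports contained in a kubectl PORT(S)
# value such as "7077:31077/TCP,8080:30080/TCP", one per line.
# Entries without a node port (e.g. "5432/TCP") are skipped.
node_ports() {
  # Split the comma-separated entries, then keep the number after the colon
  printf '%s\n' "$1" | tr ',' '\n' | sed -n 's#^[0-9]*:\([0-9]*\)/.*#\1#p'
}
```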

-

When installing GIS Cloud Suite on K3S, the progress bar stops moving. How to solve the problem?

Answer: Check the status of the pods with the following command:

```shell
kubectl -n kube-system get pod
```

If the pods are not in the ‘Running’ or ‘Completed’ status, the nodes of the K3S cluster may be unable to communicate because of the firewall. Execute the following commands to stop and disable the firewall:

```shell
systemctl stop firewalld
systemctl disable firewalld
```

After the firewall has been stopped for a while, check the status of the pods again:

```shell
kubectl get pod --all-namespaces
```

If the pods are still abnormal, look for other causes.

-

How to replace the security certificate in GIS Cloud Suite?

Answer: There are two kinds of security certificates in iManager for K8s: one for the security center (Keycloak) and one for the access entrance. The replacement method depends on whether a domain name has been configured for the access entrance or Keycloak. Follow the steps below to replace the security certificates:

Replace the Security Certificate for Keycloak (Without Domain Name)

(1) Execute the following command on the Kubernetes Master machine to find the volume of the security certificate (icloud-native-<id> in the command is the namespace of GIS Cloud Suite; replace it with the actual namespace):

```shell
kubectl -n icloud-native-<id> describe pvc pvc-keycloak-certificate-<id> | grep Volume: | awk -F ' ' '{print $2}' | xargs kubectl describe pv
```

(2) Store your new security certificate in the volume found in step (1).

Notes:

The security certificate includes a certificate and a private key. Rename the certificate to tls.crt and the private key to tls.key.

(3) Log in to iManager and redeploy the Keycloak service in Basic Services.
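Because the files are copied into the volume directly, nothing checks that tls.crt and tls.key belong together before Keycloak is redeployed. A minimal sketch of such a check, assuming OpenSSL is installed and the private key is not passphrase-protected: it compares the public key embedded in the certificate with the public key derived from the private key. `cert_matches_key` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the certificate and private key
# contain the same public key (requires OpenSSL; unencrypted key assumed).
# Usage: cert_matches_key tls.crt tls.key
cert_matches_key() {
  local cert_pub key_pub
  # Public key embedded in the certificate, in PEM form
  cert_pub=$(openssl x509 -in "$1" -noout -pubkey) || return 2
  # Public key derived from the private key, in PEM form
  key_pub=$(openssl pkey -in "$2" -pubout) || return 2
  [ "$cert_pub" = "$key_pub" ]
}
```

For example, run `cert_matches_key tls.crt tls.key && echo "certificate and key match"` before copying the files into the volume.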

Replace the Security Certificate for Keycloak (With Domain Name)

(1) Copy the security certificate to the Kubernetes Master machine.

(2) Execute the following commands (<id> is the ID of the namespace created when installing GIS Cloud Suite; <certificateFile> is the file name of the certificate; <privateFile> is the file name of the private key; replace them with the actual values):

```shell
kubectl -n icloud-native-<id> delete secret keycloak-ingress-tls
kubectl -n icloud-native-<id> create secret tls keycloak-ingress-tls --cert='<certificateFile>' --key='<privateFile>'
```

(3) Visit Keycloak through the domain name.

Replace the Security Certificate for Access Entrance (Without Domain Name)

(1) Execute the following command on the Kubernetes Master machine to find the volume of the security certificate (icloud-native-<id> in the command is the namespace of GIS Cloud Suite; replace it with the actual namespace):

```shell
kubectl -n icloud-native-<id> describe pvc pvc-gateway-certificate-<id> | grep Volume: | awk -F ' ' '{print $2}' | xargs kubectl describe pv
```

(2) Store your new security certificate in the volume found in step (1).

Notes:

Rename the security certificate to certificate.keystore.

(3) Modify the certificate configuration of the access entrance:

```shell
kubectl -n icloud-native-<id> edit deploy iserver-gateway
```

Find the icn_ext_param_server_ssl_keyStorePassword setting in the file and change its ‘value’ to the password of the security certificate. Save and quit; the access entrance service redeploys automatically.

Replace the Security Certificate for Access Entrance (With Domain Name)

(1) Copy the security certificate to the Kubernetes Master machine.

(2) Execute the following commands (<id> is the ID of the namespace created when installing GIS Cloud Suite; <certificateFile> is the file name of the certificate; <privateFile> is the file name of the private key; replace them with the actual values):

```shell
kubectl -n icloud-native-<id> delete secret gateway-ingress-tls
kubectl -n icloud-native-<id> create secret tls gateway-ingress-tls --cert='<certificateFile>' --key='<privateFile>'
```

(3) Visit the access entrance through the domain name.
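Deleting the secret before re-creating it briefly leaves the ingress without that secret. As an alternative for either domain-name case, assuming kubectl v1.18 or later (where `--dry-run=client` is available), the secret can be rendered locally and applied in one step, which updates it in place if it already exists. `replace_tls_secret` is a hypothetical helper; pass it the namespace, the secret name (`keycloak-ingress-tls` or `gateway-ingress-tls`), and the certificate and key files.

```shell
#!/bin/sh
# Hypothetical helper: create or update a TLS secret in one step.
# Usage: replace_tls_secret <namespace> <secret-name> <certificateFile> <privateFile>
replace_tls_secret() {
  # Render the secret manifest locally, then apply it to the cluster;
  # "apply" updates an existing secret instead of failing on it.
  kubectl -n "$1" create secret tls "$2" --cert="$3" --key="$4" \
    --dry-run=client -o yaml |
    kubectl -n "$1" apply -f -
}
```

For example, `replace_tls_secret icloud-native-<id> gateway-ingress-tls '<certificateFile>' '<privateFile>'` replaces the two commands in step (2).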

-

How to solve the problem of data loss after restarting GIS Cloud Suite when you did not configure an NFS Server or StorageClass?

Answer: If you cannot see the IP address of the Distributed Analysis Service, or there is no data in the built-in Storage Resources (PostGIS, PostgreSQL, etc.) after restarting GIS Cloud Suite, disable the built-in environment and then enable it again.

-

How to solve the problem that some functions are unavailable after updating the image of Gisapplication or iPortal?

Answer: If you update only Gisapplication or only iPortal, the configuration and code may be incompatible. Update both Gisapplication and iPortal.
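To confirm that both images were actually updated, you can compare the image tags of the two deployments. A sketch, assuming the deployment names contain ‘gisapplication’ or ‘iportal’ (check the actual names on the Deployments page); `list_app_images` is a hypothetical helper built on kubectl's jsonpath output.

```shell
#!/bin/sh
# Hypothetical helper: list the images of the Gisapplication and iPortal
# deployments so you can confirm both were updated to the same version.
# Assumes the deployment names contain "gisapplication" or "iportal".
# Usage: list_app_images <namespace>
list_app_images() {
  # One line per deployment: <name><TAB><image(s)>
  kubectl -n "$1" get deployments \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.template.spec.containers[*].image}{"\n"}{end}' |
    grep -Ei 'gisapplication|iportal'
}
```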

-

How to solve the problem when Keycloak reports the error ‘io.undertow.util.ParameterLimitException: UT000047: The number of parameters exceeded the maximum of 1000’?

Answer: The error occurs when you have too many service instances: the number of parameters sent to Keycloak in one request exceeds the maximum of 1000, so you need to raise the maximum. Follow the steps below to solve the problem:

(1) Log in to the management page of Kubernetes.

(2) Click on Deployments in the namespace of your GIS Cloud Suite.

(3) Find ‘keycloak’ and click Actions > View/edit YAML.

(4) Add the variable ‘UNDERTOW_MAX_PARAMETERS’ to spec->template->spec->containers->env. The value must be larger than the maximum reported in the error. For example:

```
{ "name": "UNDERTOW_MAX_PARAMETERS", "value": "1500" },
```

-

How to create the resource with the same name as the Secret when configuring the image pull secret?

Answer: When configuring the image pull Secret, you need to create a Secret resource with the same name in the GIS Cloud Suite namespace of Kubernetes. If you enable the metrics server, you also need to create a resource with the same name in the ‘kube-system’ namespace. Execute the following command on the Kubernetes Master machine to create the resource:

```shell
kubectl create secret docker-registry <image-pull-secret> --docker-server="<172.16.17.11:5002>" --docker-username=<admin> --docker-password=<adminpassword> -n <giscloudsuite>
```

Notes:

Replace the contents enclosed in ‘<>’ in the command according to your actual environment, and delete the ‘<>’ symbols after replacing.

<image-pull-secret> is the name of your Secret; <172.16.17.11:5002> is the address of your registry; <admin> is the registry account (namespace) used to log in; <adminpassword> is the password of that account; <giscloudsuite> is the namespace of GIS Cloud Suite (replace <giscloudsuite> with ‘kube-system’ when you create the resource in the kube-system namespace).

-

How to configure automatic refresh for service instances?

Answer: Refreshing service instances updates them after their datasources have been updated. If you configure automatic refresh, the system refreshes the service instances automatically at a fixed interval. Follow the steps below to configure automatic refresh in the YAML file of the service node:

(1) Find the service node that the service instance is running on (click Service Management > Service Instances and open the details page of the service instance to see its service node).

(2) Log in to the management page of Kubernetes, click Deployments in the namespace of your GIS Cloud Suite, and find the service node by its name.

(3) Edit the service node: click Actions > View/edit YAML.

(4) Add the variables ‘REFRESH_DATASOURCE’ and ‘CHECK_DATASOURCE_CONNECTION_INTERVAL’ to spec->template->spec->containers->env. ‘REFRESH_DATASOURCE’ enables automatic refresh, and ‘CHECK_DATASOURCE_CONNECTION_INTERVAL’ sets the interval in seconds. For example, to enable automatic refresh with an interval of 1 hour:

```
{ "name": "REFRESH_DATASOURCE", "value": "true" },
{ "name": "CHECK_DATASOURCE_CONNECTION_INTERVAL", "value": "3600" },
```

(5) Click UPDATE and wait for the service to redeploy.
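Instead of editing the YAML by hand, the same two variables can be set with `kubectl set env`, which changes the pod template and therefore also triggers a rolling redeploy of the deployment. A sketch, where `enable_auto_refresh` is a hypothetical helper and the deployment name is the service node name found in step (2).

```shell
#!/bin/sh
# Hypothetical helper: set the two refresh variables on a service node
# deployment with "kubectl set env", which triggers a rolling redeploy.
# Usage: enable_auto_refresh <namespace> <service-node-deployment> <hours>
enable_auto_refresh() {
  local seconds
  # CHECK_DATASOURCE_CONNECTION_INTERVAL is in seconds (3600 = 1 hour)
  seconds=$(( $3 * 3600 ))
  kubectl -n "$1" set env "deployment/$2" \
    REFRESH_DATASOURCE=true \
    CHECK_DATASOURCE_CONNECTION_INTERVAL="$seconds"
}
```

For example, `enable_auto_refresh <namespace> <service-node> 1` applies the same settings as the example in step (4).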

-

How to solve the problem that the Kubernetes cluster in Alibaba Cloud cannot connect to the built-in HBase in GIS Cloud Suite?

Answer: Please follow the steps below to solve the problem:

(1) Find the worker node that the EIP is bound to in the Kubernetes cluster in Alibaba Cloud. Execute the following command to get the names of the worker nodes:

```shell
kubectl get nodes
```

(2) Update the service. Execute:

```shell
kubectl -n <namespace> patch deployment/nginx-ingress-controller -p "
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
spec:
  template:
    spec:
      nodeName: <nodeName>
"
```

(3) Wait for the service to start.

Notes:

Replace <namespace> and <nodeName> in step (2) with the actual values of your environment. <namespace> is the namespace of iManager (or GIS Cloud Suite); <nodeName> is the name of the worker node in the Kubernetes cluster that the EIP is bound to.
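The patch in step (2) can also be written as a one-line JSON document, which avoids indentation-sensitive YAML inside the quotes. A sketch, with `pin_ingress_to_node` as a hypothetical helper; afterwards, `kubectl -n <namespace> get pods -o wide` shows which node the nginx-ingress-controller pod runs on.

```shell
#!/bin/sh
# Hypothetical helper: pin the nginx-ingress-controller deployment to one
# node by setting spec.template.spec.nodeName with a one-line JSON patch.
# Usage: pin_ingress_to_node <namespace> <nodeName>
pin_ingress_to_node() {
  kubectl -n "$1" patch deployment/nginx-ingress-controller \
    -p "{\"spec\":{\"template\":{\"spec\":{\"nodeName\":\"$2\"}}}}"
}
```

Setting `nodeName` bypasses the Kubernetes scheduler, so the pod stays bound to that node and is not moved elsewhere if the node becomes unavailable.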

-

How to configure local storage for built-in HBase environment?

Answer: Storing HBase data on an NFS volume reduces its read/write performance. You can improve performance by using local storage instead:

(1) Set deploy_disable_hbase_nfs_volume to true in the configuration file (values.yaml):

```yaml
deploy_disable_hbase_nfs_volume: true
```

(2) Refer to the hbase-datanode-local-volume.yaml file and modify it according to your actual environment:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  labels:
    type: icloud-native
  name: icloud-native-hbase-datanode-volume-0 #Point 1
spec:
  storageClassName: local-volume-storage-class
  capacity:
    storage: 10Ti
  accessModes:
    - ReadWriteMany
  local:
    path: /opt/imanager-data/datanode-data #Point 2
  persistentVolumeReclaimPolicy: Delete
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node1 #Point 3
```

The places that need to be modified are marked with ‘#’ above:

- Point 1: Specify the name of the PV.

- Point 2: The actual path for storing the HBase data; create the directory first. To create multiple PVs on one node, create multiple directories and point each PV to a different directory.

  Use the command below to create the directory on the node (replace the path with your actual setting):

  ```shell
  mkdir -p /opt/imanager-data/datanode-data
  ```

- Point 3: Fill in the name of the Kubernetes node (the node must be schedulable).

(3) After modifying the file, execute the following command on the Kubernetes master node:

```shell
kubectl apply -f hbase-datanode-local-volume.yaml
```

Notes:

- The number of PVs must equal the number of dataNodes in the HBase environment, which is 3 by default. If you scale the dataNodes, create the same number of PVs.

- A PV can be created on any Kubernetes node (specified by Point 3); creating the PVs on different nodes is recommended.

- The PVs can be created either before or after enabling/scaling HBase.
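Because one PV is needed per dataNode, writing the manifests by hand is repetitive. The sketch below prints one PV per node name, following the hbase-datanode-local-volume.yaml template; `gen_hbase_pvs` is a hypothetical helper, and it assumes each PV uses its own directory `<base-path>/datanode-data-<n>`, which you still need to create on the matching node with `mkdir -p`.

```shell
#!/bin/sh
# Hypothetical helper: print one local PersistentVolume per HBase dataNode,
# following the hbase-datanode-local-volume.yaml template.
# Assumption: PV <n> uses its own directory <base-path>/datanode-data-<n>.
# Usage: gen_hbase_pvs <base-path> <node> [<node> ...]
gen_hbase_pvs() {
  local base i node
  base="$1"
  shift
  i=0
  for node in "$@"; do
    # Point 1: PV name, Point 2: data directory, Point 3: node name
    cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  labels:
    type: icloud-native
  name: icloud-native-hbase-datanode-volume-${i}
spec:
  storageClassName: local-volume-storage-class
  capacity:
    storage: 10Ti
  accessModes:
    - ReadWriteMany
  local:
    path: ${base}/datanode-data-${i}
  persistentVolumeReclaimPolicy: Delete
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - ${node}
EOF
    i=$((i + 1))
  done
}
```

For example, `gen_hbase_pvs /opt/imanager-data node1 node2 node3 > hbase-datanode-local-volume.yaml` writes three PVs for the default three dataNodes, which you can then apply with `kubectl apply -f hbase-datanode-local-volume.yaml`.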

-

How to solve the problem that service instances published from the built-in HBase, PostGIS, and PostgreSQL cannot be accessed after you use the recovery function in GIS Cloud Suite?

Answer: After all the built-in services are available, refresh the related service instances.

-

How to solve the error “from origin ‘http://{ip}:{port}/’ has been blocked by CORS policy: No ‘Access-Control-Allow-Origin’ header is present on the requested resource.” when you access the command pad?

Answer: Please follow the steps below to solve the problem:

Chrome v91 Solution

(1) Close Chrome.

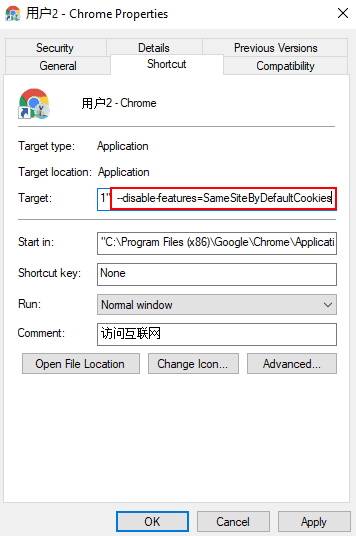

(2) Right-click the Chrome shortcut and open Properties. On the Shortcut tab, add a space at the end of the Target field and paste ‘--disable-features=SameSiteByDefaultCookies’ or ‘--flag-switches-begin --disable-features=SameSiteByDefaultCookies,CookiesWithoutSameSiteMustBeSecure --flag-switches-end’.

(3) Restart Chrome.

Chrome v80 Solution

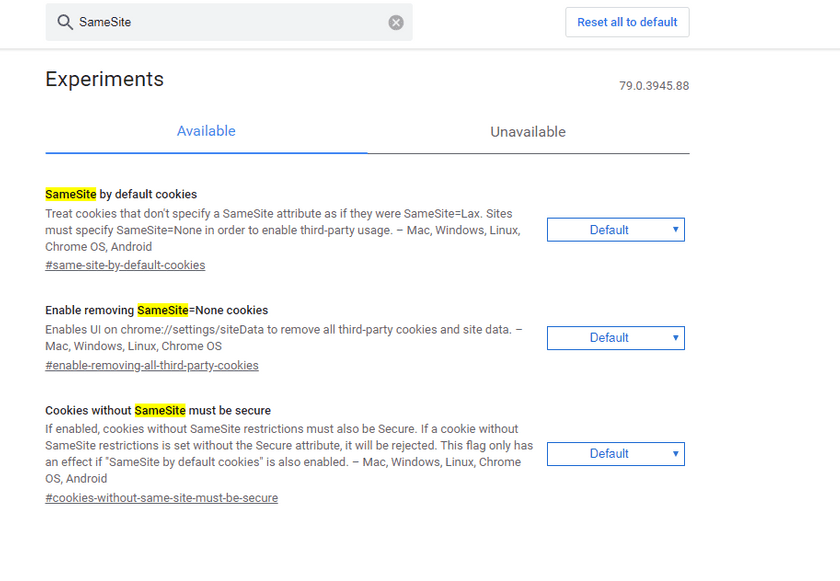

(1) Open Chrome and visit the address ‘chrome://flags’.

(2) Search for the keyword ‘SameSite’ and disable all the results.

(3) Restart Chrome.

-

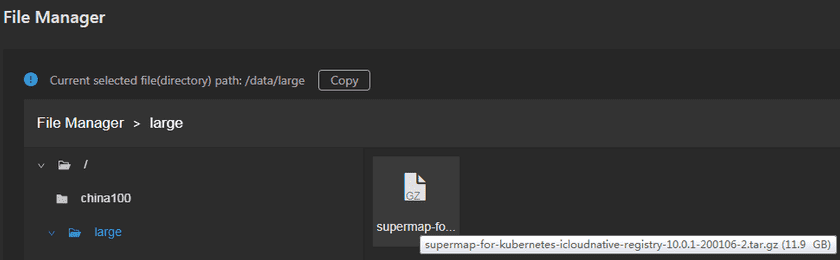

How to solve the problem that File Manager gets stuck and the upload fails when uploading large data files over 10G in GIS Cloud Suite?

Answer: To upload large data files over 10G, store them directly in the File Manager volume instead of uploading them through File Manager. Follow the steps below:

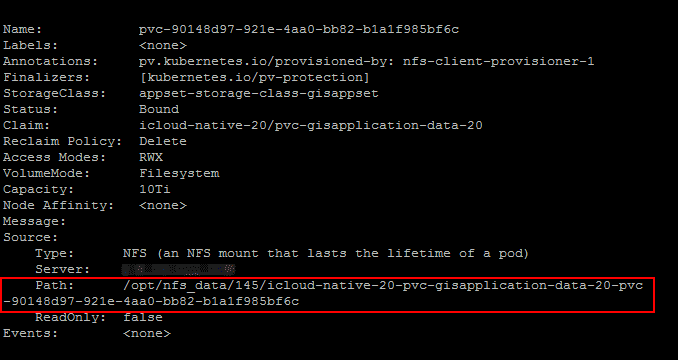

(1) Executes the following command to find the volume(

icloud-native-<id>in the command is the namespace of GIS Cloud Suite;<id>is the ID of namespace when creating GIS Cloud Suite. Please replace to the actual namespace):kubectl -n icloud-native-<id> describe pvc pvc-gisapplication-<id> | grep Volume: | awk -F ' ' '{print $2}' | xargs kubectl describe pvCheck the result and find the volume of File Manager:

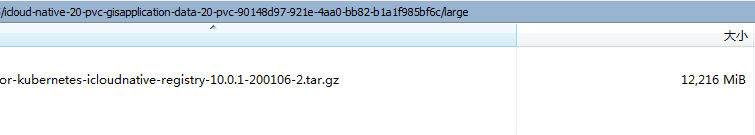

(2) Stores the large data files need uploading to the volume in step (1) for uploading:

Upload completed.
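If the volume is NFS-backed, the NFS server and export path can also be read directly from the PersistentVolume with kubectl's jsonpath output, instead of scanning the `describe` text. A sketch; `pvc_nfs_location` is a hypothetical helper, and for volumes that are not NFS-backed the server and path fields are empty.

```shell
#!/bin/sh
# Hypothetical helper: print "<nfs-server>:<export-path>" for the volume
# bound to a PVC. Both parts are empty if the volume is not NFS-backed.
# Usage: pvc_nfs_location <namespace> <pvc-name>
pvc_nfs_location() {
  local pv
  # Name of the PersistentVolume bound to the claim
  pv=$(kubectl -n "$1" get pvc "$2" -o jsonpath='{.spec.volumeName}') || return 1
  # NFS server and export path recorded on that PersistentVolume
  kubectl get pv "$pv" -o jsonpath='{.spec.nfs.server}:{.spec.nfs.path}'
  # jsonpath output has no trailing newline, so add one
  echo
}
```

For example, `pvc_nfs_location icloud-native-<id> pvc-gisapplication-<id>` prints the server and path; you can then copy the large files (for example with `scp` or `rsync`) into the File Manager directory under that path on the NFS server.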

-

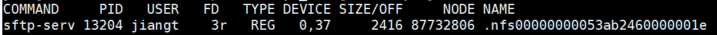

How to solve the problem that File Manager generates an NFS (.nfsxxxx) file after any file is deleted, and deleting the NFS file fails in GIS Cloud Suite?

Answer: Please follow the steps below to delete the NFS file.

(1) Execute the following command to find the volume of the NFS file (icloud-native-<id> in the command is the namespace of GIS Cloud Suite, where <id> is the ID of the namespace created when installing GIS Cloud Suite; replace it with the actual namespace):

```shell
kubectl -n icloud-native-<id> describe pvc pvc-gisapplication-<id> | grep Volume: | awk -F ' ' '{print $2}' | xargs kubectl describe pv
```

(2) Execute the following command in the volume to try deleting the NFS file (replace .nfsxxxx with the actual file name):

```shell
rm -f .nfsxxxx
```

(3) If step (2) fails, a process is still accessing the file, so it cannot be deleted. Use the lsof command to view the process that is accessing the file:

```shell
lsof .nfs00000000053ab2460000001e  # The NFS file to delete; modify according to your actual environment
```

If root permission is required, run the command with sudo to display the result:

```shell
sudo lsof .nfs00000000053ab2460000001e
```

(4) Kill the accessing process by its PID and delete the NFS file:

```shell
kill -9 13204  # 13204 is an example PID reported by lsof
rm -f .nfs00000000053ab2460000001e
```

-

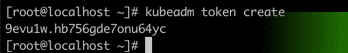

How to solve the problem that the original token is invalid or the GIS Cloud Suite page cannot be visited after upgrading GIS Cloud Suite?

Answer: Please follow the steps below to solve the problem:

Regenerate Token Solution

Execute the following command to regenerate the token:

```shell
kubeadm token create
```

Notes: This solution is recommended.

Configure Environment Variable Solution

(1) Log in to the management page of Kubernetes.

(2) Click on Deployments in the namespace of your GIS Cloud Suite.

(3) Find ‘common-dashboard-api’ and ‘keycloak’, and click Actions > View/edit YAML for each of them.

(4) Add the variable ‘CIPHER_ALGORITHM’ to spec->template->spec->containers->env of each of them, for example with the value ‘AES/CBC/PKCS5Padding’:

```
{ "name": "CIPHER_ALGORITHM", "value": "AES/CBC/PKCS5Padding" },
```

-

How to configure cross-origin for GIS Cloud Suite?

Answer: Please follow the steps below to configure cross-origin for GIS Cloud Suite:

(1) Log in to the management page of Kubernetes.

(2) Click on Deployments in the namespace of your GIS Cloud Suite.

(3) Find ‘ispeco-dashboard-api’ and ‘iserver-gateway’, click Actions > View/edit YAML for each of them, and locate spec->template->spec->containers->env.

(4) Add the variable ‘DISABLE_DEFAULT_CORS_CONFIG’ to each of them to control the default cross-origin configuration. The default value is ‘false’, which means the cross-origin configuration is enabled. If the value is ‘true’, the cross-origin configuration is disabled, and cross-origin requests to the URL are intercepted. For example:

```
{ "name": "DISABLE_DEFAULT_CORS_CONFIG", "value": "false" },
```

(5) Add the variable ‘CORS_CORSFILTER_INITPARAMS’ to each of them to configure the cross-origin parameters. The parameters are as follows:

```
Access-Control-Allow-Origin       #Allowed cross-origin origin
Access-Control-Allow-Methods      #Allowed request methods, e.g. 'GET,POST,PUT,DELETE,OPTIONS,HEAD'
Access-Control-Allow-Headers      #Allowed request headers
Access-Control-Allow-Credentials  #Whether cookies are sent, 'true' or 'false'
```

Notes: Separate adjacent parameters with a semicolon ‘;’.

For example:

```
{ "name": "CORS_CORSFILTER_INITPARAMS", "value": "Access-Control-Allow-Origin=http://www.baidu.com;Access-Control-Allow-Methods=GET,POST,PUT,DELETE,OPTIONS,HEAD;Access-Control-Allow-Headers=*;Access-Control-Allow-Credentials=true" },
```

(6) Add the variable ‘CORS_URL_PATTERNS’ to each of them to set the URL paths to which cross-origin interception applies. Multiple URL paths can be set; by default, all URL paths match.

Notes: Separate multiple URL paths with a comma ‘,’.

For example:

```
{ "name": "CORS_URL_PATTERNS", "value": "/token,/iserver/services/**" },
```

-

How to solve the problem that the logs include “The connection to the server localhost:8080 was refused - did you specify the right host or port? Error: Kubernetes cluster unreachable” when deploying GIS Cloud Suite on Kubernetes?

Answer: There are two solutions to the above problem; choose either of them:

Switch to the Root User Solution

Execute the following command again with root privileges:

```shell
sudo ./startup.sh
```

Increase kubectl Usage Permissions Solution

Execute the following commands in any directory of the server:

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown <userName>:<userName> $HOME/.kube/config
```

Notes:

<userName> in the command is your actual username; replace it.

-

How to reset the administrator account and password?

Answer: The reset-password.sh file in the GIS Cloud Suite deployment package directory is used to reset the administrator account. Follow the steps below to reset the account and password:

(1) Go to the GIS Cloud Suite deployment package directory (the directory in which you executed ./startup.sh to install GIS Cloud Suite).

(2) Reset the administrator account by executing reset-password.sh:

```shell
chmod +x reset-password.sh && ./reset-password.sh
```